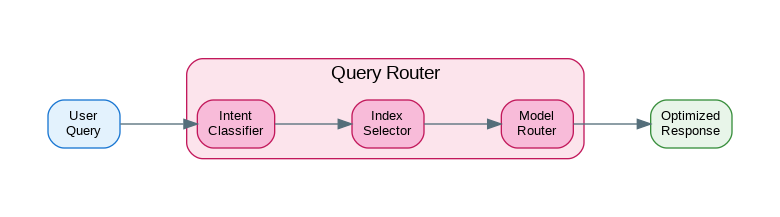

Introduction: Not all queries are equal—some need fast, cheap responses while others require deep reasoning. Query routing intelligently directs requests to the right model, index, or processing pipeline based on query characteristics. Route simple factual questions to smaller models, complex reasoning to GPT-4, and domain-specific queries to specialized indexes. This approach optimizes both cost and quality: you’re not paying for GPT-4 to answer “what time is it?” while ensuring complex queries get the reasoning power they need. This guide covers practical routing strategies: intent classification, semantic routing, complexity-based model selection, and multi-index retrieval routing. Whether you’re building a cost-efficient chatbot or a sophisticated RAG system, intelligent routing can reduce costs by 60-80% while maintaining or improving response quality.

Intent-Based Routing

from dataclasses import dataclass, field

from typing import Any, Optional, Callable

from abc import ABC, abstractmethod

from enum import Enum

class QueryIntent(Enum):

"""Query intent categories."""

FACTUAL = "factual" # Simple fact lookup

ANALYTICAL = "analytical" # Requires reasoning

CREATIVE = "creative" # Creative writing

CODE = "code" # Code generation

CONVERSATIONAL = "conversational" # Chitchat

SEARCH = "search" # Information retrieval

TASK = "task" # Action/task execution

@dataclass

class RoutingDecision:

"""Routing decision."""

intent: QueryIntent

model: str

index: str = None

confidence: float = 1.0

metadata: dict = field(default_factory=dict)

class IntentClassifier(ABC):

"""Abstract intent classifier."""

@abstractmethod

async def classify(self, query: str) -> tuple[QueryIntent, float]:

"""Classify query intent."""

pass

class KeywordIntentClassifier(IntentClassifier):

"""Rule-based intent classification."""

def __init__(self):

self.patterns = {

QueryIntent.CODE: [

"write code", "implement", "function", "class",

"debug", "fix this code", "python", "javascript"

],

QueryIntent.FACTUAL: [

"what is", "who is", "when did", "where is",

"how many", "define", "what does"

],

QueryIntent.ANALYTICAL: [

"why", "explain", "analyze", "compare",

"what are the implications", "how does this affect"

],

QueryIntent.CREATIVE: [

"write a story", "create", "imagine",

"generate ideas", "brainstorm"

],

QueryIntent.CONVERSATIONAL: [

"hello", "hi", "how are you", "thanks",

"bye", "good morning"

],

QueryIntent.SEARCH: [

"find", "search", "look up", "show me",

"list", "what are some"

],

QueryIntent.TASK: [

"schedule", "remind", "send", "book",

"order", "calculate"

]

}

async def classify(self, query: str) -> tuple[QueryIntent, float]:

"""Classify using keyword matching."""

query_lower = query.lower()

scores = {}

for intent, keywords in self.patterns.items():

score = sum(1 for kw in keywords if kw in query_lower)

if score > 0:

scores[intent] = score

if not scores:

return QueryIntent.CONVERSATIONAL, 0.5

best_intent = max(scores, key=scores.get)

confidence = min(scores[best_intent] / 3, 1.0)

return best_intent, confidence

class LLMIntentClassifier(IntentClassifier):

"""LLM-based intent classification."""

def __init__(self, llm_client: Any):

self.llm = llm_client

self.prompt_template = """Classify the following query into one of these categories:

- FACTUAL: Simple fact lookup or definition

- ANALYTICAL: Requires reasoning, analysis, or explanation

- CREATIVE: Creative writing or idea generation

- CODE: Code generation, debugging, or programming help

- CONVERSATIONAL: Casual conversation or greetings

- SEARCH: Information retrieval or listing

- TASK: Action execution or task completion

Query: {query}

Respond with just the category name and confidence (0-1):

Category:

Confidence: """

async def classify(self, query: str) -> tuple[QueryIntent, float]:

"""Classify using LLM."""

prompt = self.prompt_template.format(query=query)

response = await self.llm.complete(prompt)

# Parse response

lines = response.content.strip().split('\n')

category = "CONVERSATIONAL"

confidence = 0.5

for line in lines:

if line.startswith("Category:"):

category = line.split(":")[1].strip().upper()

elif line.startswith("Confidence:"):

try:

confidence = float(line.split(":")[1].strip())

except ValueError:

pass

try:

intent = QueryIntent[category]

except KeyError:

intent = QueryIntent.CONVERSATIONAL

return intent, confidence

class EmbeddingIntentClassifier(IntentClassifier):

"""Embedding-based intent classification."""

def __init__(

self,

embedding_model: Any,

examples: dict[QueryIntent, list[str]]

):

self.embedding_model = embedding_model

self.examples = examples

self.intent_embeddings: dict[QueryIntent, list] = {}

async def initialize(self):

"""Pre-compute example embeddings."""

for intent, queries in self.examples.items():

embeddings = await self.embedding_model.embed(queries)

self.intent_embeddings[intent] = embeddings

async def classify(self, query: str) -> tuple[QueryIntent, float]:

"""Classify using embedding similarity."""

import numpy as np

query_embedding = await self.embedding_model.embed([query])

query_embedding = np.array(query_embedding[0])

best_intent = QueryIntent.CONVERSATIONAL

best_score = -1

for intent, embeddings in self.intent_embeddings.items():

embeddings_array = np.array(embeddings)

# Compute similarities

similarities = np.dot(embeddings_array, query_embedding) / (

np.linalg.norm(embeddings_array, axis=1) * np.linalg.norm(query_embedding)

)

# Use max similarity

max_sim = np.max(similarities)

if max_sim > best_score:

best_score = max_sim

best_intent = intent

return best_intent, float(best_score) Model Routing

from dataclasses import dataclass

from typing import Any, Optional

from enum import Enum

class ModelTier(Enum):

"""Model tiers by capability/cost."""

FAST = "fast" # GPT-3.5, Claude Instant

BALANCED = "balanced" # GPT-4-turbo, Claude 3 Sonnet

POWERFUL = "powerful" # GPT-4, Claude 3 Opus

@dataclass

class ModelConfig:

"""Model configuration."""

name: str

tier: ModelTier

cost_per_1k_tokens: float

max_tokens: int

supports_functions: bool = True

supports_vision: bool = False

class ModelRouter:

"""Route queries to appropriate models."""

def __init__(self):

self.models = {

ModelTier.FAST: ModelConfig(

name="gpt-3.5-turbo",

tier=ModelTier.FAST,

cost_per_1k_tokens=0.0015,

max_tokens=16384

),

ModelTier.BALANCED: ModelConfig(

name="gpt-4-turbo",

tier=ModelTier.BALANCED,

cost_per_1k_tokens=0.01,

max_tokens=128000

),

ModelTier.POWERFUL: ModelConfig(

name="gpt-4",

tier=ModelTier.POWERFUL,

cost_per_1k_tokens=0.03,

max_tokens=8192

)

}

# Intent to tier mapping

self.intent_tiers = {

QueryIntent.FACTUAL: ModelTier.FAST,

QueryIntent.CONVERSATIONAL: ModelTier.FAST,

QueryIntent.SEARCH: ModelTier.FAST,

QueryIntent.TASK: ModelTier.BALANCED,

QueryIntent.CODE: ModelTier.BALANCED,

QueryIntent.ANALYTICAL: ModelTier.POWERFUL,

QueryIntent.CREATIVE: ModelTier.BALANCED

}

def route(

self,

intent: QueryIntent,

query_length: int = 0,

require_functions: bool = False,

require_vision: bool = False

) -> ModelConfig:

"""Route to appropriate model."""

# Get base tier from intent

tier = self.intent_tiers.get(intent, ModelTier.BALANCED)

# Upgrade tier if query is complex (long)

if query_length > 2000 and tier == ModelTier.FAST:

tier = ModelTier.BALANCED

# Get model config

model = self.models[tier]

# Check requirements

if require_functions and not model.supports_functions:

# Upgrade to model with function support

for upgrade_tier in [ModelTier.BALANCED, ModelTier.POWERFUL]:

if self.models[upgrade_tier].supports_functions:

model = self.models[upgrade_tier]

break

if require_vision and not model.supports_vision:

# Use vision-capable model

model = ModelConfig(

name="gpt-4-vision-preview",

tier=ModelTier.POWERFUL,

cost_per_1k_tokens=0.03,

max_tokens=128000,

supports_vision=True

)

return model

class ComplexityRouter:

"""Route based on query complexity."""

def __init__(self, llm_client: Any = None):

self.llm = llm_client

self.complexity_prompt = """Rate the complexity of answering this query on a scale of 1-10:

1-3: Simple factual lookup or basic task

4-6: Moderate reasoning or multi-step task

7-10: Complex analysis, creative work, or expert knowledge

Query: {query}

Respond with just the number:"""

async def estimate_complexity(self, query: str) -> int:

"""Estimate query complexity."""

# Quick heuristics first

complexity = 3

# Length-based

if len(query) > 500:

complexity += 2

elif len(query) > 200:

complexity += 1

# Keyword-based

complex_keywords = [

"analyze", "compare", "explain why", "implications",

"trade-offs", "optimize", "design", "architect"

]

for keyword in complex_keywords:

if keyword in query.lower():

complexity += 1

# Question count

question_count = query.count("?")

complexity += min(question_count - 1, 2)

# Cap at 10

complexity = min(complexity, 10)

# Use LLM for borderline cases

if self.llm and 4 <= complexity <= 6:

prompt = self.complexity_prompt.format(query=query)

response = await self.llm.complete(prompt)

try:

llm_complexity = int(response.content.strip())

complexity = (complexity + llm_complexity) // 2

except ValueError:

pass

return complexity

async def route(self, query: str) -> ModelTier:

"""Route based on complexity."""

complexity = await self.estimate_complexity(query)

if complexity <= 3:

return ModelTier.FAST

elif complexity <= 6:

return ModelTier.BALANCED

else:

return ModelTier.POWERFUL

class CostAwareRouter:

"""Route with cost optimization."""

def __init__(

self,

budget_per_request: float = 0.01,

daily_budget: float = 100.0

):

self.budget_per_request = budget_per_request

self.daily_budget = daily_budget

self.daily_spent = 0.0

self.last_reset = None

self.model_router = ModelRouter()

def _estimate_cost(

self,

model: ModelConfig,

input_tokens: int,

output_tokens: int

) -> float:

"""Estimate request cost."""

total_tokens = input_tokens + output_tokens

return (total_tokens / 1000) * model.cost_per_1k_tokens

async def route(

self,

intent: QueryIntent,

query: str,

expected_output_tokens: int = 500

) -> ModelConfig:

"""Route with cost awareness."""

import datetime

# Reset daily budget

today = datetime.date.today()

if self.last_reset != today:

self.daily_spent = 0.0

self.last_reset = today

# Get ideal model

ideal_model = self.model_router.route(intent, len(query))

# Estimate cost

input_tokens = len(query) // 4

estimated_cost = self._estimate_cost(

ideal_model,

input_tokens,

expected_output_tokens

)

# Check budgets

if estimated_cost > self.budget_per_request:

# Downgrade model

for tier in [ModelTier.BALANCED, ModelTier.FAST]:

downgraded = self.model_router.models[tier]

cost = self._estimate_cost(

downgraded,

input_tokens,

expected_output_tokens

)

if cost <= self.budget_per_request:

return downgraded

if self.daily_spent + estimated_cost > self.daily_budget:

# Force cheapest model

return self.model_router.models[ModelTier.FAST]

self.daily_spent += estimated_cost

return ideal_modelIndex Routing

from dataclasses import dataclass

from typing import Any, Optional

@dataclass

class IndexConfig:

"""Index configuration."""

name: str

description: str

domains: list[str]

embedding_model: str

chunk_size: int

class SemanticIndexRouter:

"""Route queries to appropriate indexes."""

def __init__(

self,

embedding_model: Any,

indexes: list[IndexConfig]

):

self.embedding_model = embedding_model

self.indexes = {idx.name: idx for idx in indexes}

self.index_embeddings: dict[str, list] = {}

async def initialize(self):

"""Pre-compute index description embeddings."""

for name, config in self.indexes.items():

# Embed description and domain keywords

texts = [config.description] + config.domains

embeddings = await self.embedding_model.embed(texts)

self.index_embeddings[name] = embeddings

async def route(

self,

query: str,

top_k: int = 1

) -> list[tuple[str, float]]:

"""Route query to best indexes."""

import numpy as np

query_embedding = await self.embedding_model.embed([query])

query_embedding = np.array(query_embedding[0])

scores = []

for name, embeddings in self.index_embeddings.items():

embeddings_array = np.array(embeddings)

similarities = np.dot(embeddings_array, query_embedding) / (

np.linalg.norm(embeddings_array, axis=1) * np.linalg.norm(query_embedding)

)

# Use max similarity across description and domains

max_sim = np.max(similarities)

scores.append((name, float(max_sim)))

# Sort by score

scores.sort(key=lambda x: x[1], reverse=True)

return scores[:top_k]

class LLMIndexRouter:

"""Use LLM to route to indexes."""

def __init__(

self,

llm_client: Any,

indexes: list[IndexConfig]

):

self.llm = llm_client

self.indexes = indexes

# Build index descriptions

self.index_descriptions = "\n".join(

f"- {idx.name}: {idx.description}"

for idx in indexes

)

self.prompt_template = """Given these available knowledge indexes:

{indexes}

Which index(es) should be searched to answer this query?

Query: {query}

Respond with the index name(s), one per line. If multiple indexes are relevant, list them in order of relevance."""

async def route(

self,

query: str,

top_k: int = 2

) -> list[str]:

"""Route using LLM."""

prompt = self.prompt_template.format(

indexes=self.index_descriptions,

query=query

)

response = await self.llm.complete(prompt)

# Parse response

lines = response.content.strip().split('\n')

selected = []

index_names = {idx.name.lower(): idx.name for idx in self.indexes}

for line in lines:

line = line.strip().lower()

# Remove common prefixes

for prefix in ['- ', '* ', '1. ', '2. ', '3. ']:

if line.startswith(prefix):

line = line[len(prefix):]

if line in index_names:

selected.append(index_names[line])

return selected[:top_k]

class HybridIndexRouter:

"""Combine semantic and keyword routing."""

def __init__(

self,

semantic_router: SemanticIndexRouter,

keyword_mappings: dict[str, list[str]] # index -> keywords

):

self.semantic_router = semantic_router

self.keyword_mappings = keyword_mappings

async def route(

self,

query: str,

top_k: int = 2

) -> list[tuple[str, float]]:

"""Route using both semantic and keyword matching."""

# Keyword matching

keyword_scores = {}

query_lower = query.lower()

for index_name, keywords in self.keyword_mappings.items():

score = sum(1 for kw in keywords if kw in query_lower)

if score > 0:

keyword_scores[index_name] = score / len(keywords)

# Semantic matching

semantic_results = await self.semantic_router.route(query, top_k=len(self.keyword_mappings))

# Combine scores

combined = {}

for name, score in semantic_results:

combined[name] = score * 0.7 # 70% weight to semantic

for name, score in keyword_scores.items():

if name in combined:

combined[name] += score * 0.3 # 30% weight to keyword

else:

combined[name] = score * 0.3

# Sort and return

results = sorted(combined.items(), key=lambda x: x[1], reverse=True)

return results[:top_k]Unified Query Router

from dataclasses import dataclass

from typing import Any, Optional, Callable

@dataclass

class RoutingResult:

"""Complete routing result."""

intent: QueryIntent

model: ModelConfig

indexes: list[str]

confidence: float

reasoning: str = ""

class UnifiedRouter:

"""Unified query routing system."""

def __init__(

self,

intent_classifier: IntentClassifier,

model_router: ModelRouter,

index_router: SemanticIndexRouter = None

):

self.intent_classifier = intent_classifier

self.model_router = model_router

self.index_router = index_router

async def route(

self,

query: str,

require_functions: bool = False,

require_vision: bool = False

) -> RoutingResult:

"""Route query through all routers."""

# Classify intent

intent, confidence = await self.intent_classifier.classify(query)

# Route to model

model = self.model_router.route(

intent,

len(query),

require_functions,

require_vision

)

# Route to indexes (if applicable)

indexes = []

if self.index_router and intent in [QueryIntent.SEARCH, QueryIntent.FACTUAL, QueryIntent.ANALYTICAL]:

index_results = await self.index_router.route(query, top_k=2)

indexes = [name for name, score in index_results if score > 0.5]

return RoutingResult(

intent=intent,

model=model,

indexes=indexes,

confidence=confidence,

reasoning=f"Intent: {intent.value}, Model: {model.name}"

)

class ConditionalRouter:

"""Route with conditional logic."""

def __init__(self):

self.rules: list[tuple[Callable, RoutingResult]] = []

self.default_result: RoutingResult = None

def add_rule(

self,

condition: Callable[[str], bool],

result: RoutingResult

):

"""Add routing rule."""

self.rules.append((condition, result))

def set_default(self, result: RoutingResult):

"""Set default routing result."""

self.default_result = result

async def route(self, query: str) -> RoutingResult:

"""Route using rules."""

for condition, result in self.rules:

if condition(query):

return result

return self.default_result

class CascadingRouter:

"""Try routers in sequence until confident."""

def __init__(

self,

routers: list[tuple[IntentClassifier, float]], # (router, min_confidence)

model_router: ModelRouter

):

self.routers = routers

self.model_router = model_router

async def route(self, query: str) -> RoutingResult:

"""Route through cascade."""

for classifier, min_confidence in self.routers:

intent, confidence = await classifier.classify(query)

if confidence >= min_confidence:

model = self.model_router.route(intent, len(query))

return RoutingResult(

intent=intent,

model=model,

indexes=[],

confidence=confidence

)

# Fallback to last router's result

intent, confidence = await self.routers[-1][0].classify(query)

model = self.model_router.route(intent, len(query))

return RoutingResult(

intent=intent,

model=model,

indexes=[],

confidence=confidence

)

class ABTestRouter:

"""A/B test different routing strategies."""

def __init__(

self,

router_a: UnifiedRouter,

router_b: UnifiedRouter,

traffic_split: float = 0.5

):

self.router_a = router_a

self.router_b = router_b

self.traffic_split = traffic_split

self.metrics = {

"a": {"count": 0, "latency": [], "cost": []},

"b": {"count": 0, "latency": [], "cost": []}

}

async def route(self, query: str) -> tuple[RoutingResult, str]:

"""Route with A/B testing."""

import random

variant = "a" if random.random() < self.traffic_split else "b"

router = self.router_a if variant == "a" else self.router_b

result = await router.route(query)

self.metrics[variant]["count"] += 1

return result, variant

def record_outcome(

self,

variant: str,

latency_ms: float,

cost: float

):

"""Record outcome for analysis."""

self.metrics[variant]["latency"].append(latency_ms)

self.metrics[variant]["cost"].append(cost)

def get_stats(self) -> dict:

"""Get A/B test statistics."""

import numpy as np

stats = {}

for variant in ["a", "b"]:

m = self.metrics[variant]

stats[variant] = {

"count": m["count"],

"avg_latency": np.mean(m["latency"]) if m["latency"] else 0,

"avg_cost": np.mean(m["cost"]) if m["cost"] else 0,

"total_cost": sum(m["cost"])

}

return statsProduction Routing Service

from fastapi import FastAPI, HTTPException

from pydantic import BaseModel

from typing import Optional

import time

app = FastAPI()

class RouteRequest(BaseModel):

query: str

require_functions: bool = False

require_vision: bool = False

user_id: Optional[str] = None

class RouteResponse(BaseModel):

intent: str

model: str

indexes: list[str]

confidence: float

estimated_cost: float

# Initialize routers

keyword_classifier = KeywordIntentClassifier()

model_router = ModelRouter()

cost_router = CostAwareRouter(budget_per_request=0.05, daily_budget=100.0)

# Index configurations

indexes = [

IndexConfig(

name="documentation",

description="Technical documentation and API references",

domains=["api", "sdk", "reference", "docs"],

embedding_model="text-embedding-3-small",

chunk_size=512

),

IndexConfig(

name="knowledge_base",

description="General knowledge and FAQ answers",

domains=["faq", "help", "support", "how to"],

embedding_model="text-embedding-3-small",

chunk_size=1024

),

IndexConfig(

name="code_examples",

description="Code snippets and implementation examples",

domains=["code", "example", "implementation", "snippet"],

embedding_model="text-embedding-3-small",

chunk_size=2048

)

]

# Unified router

unified_router = UnifiedRouter(

intent_classifier=keyword_classifier,

model_router=model_router,

index_router=None # Would initialize with embedding model

)

@app.post("/v1/route")

async def route_query(request: RouteRequest) -> RouteResponse:

"""Route a query."""

start = time.time()

result = await unified_router.route(

request.query,

request.require_functions,

request.require_vision

)

# Estimate cost

input_tokens = len(request.query) // 4

estimated_cost = (input_tokens + 500) / 1000 * result.model.cost_per_1k_tokens

latency = (time.time() - start) * 1000

return RouteResponse(

intent=result.intent.value,

model=result.model.name,

indexes=result.indexes,

confidence=result.confidence,

estimated_cost=estimated_cost

)

@app.post("/v1/route/cost-aware")

async def route_cost_aware(request: RouteRequest) -> RouteResponse:

"""Route with cost awareness."""

intent, confidence = await keyword_classifier.classify(request.query)

model = await cost_router.route(

intent,

request.query,

expected_output_tokens=500

)

input_tokens = len(request.query) // 4

estimated_cost = (input_tokens + 500) / 1000 * model.cost_per_1k_tokens

return RouteResponse(

intent=intent.value,

model=model.name,

indexes=[],

confidence=confidence,

estimated_cost=estimated_cost

)

@app.get("/v1/route/stats")

async def get_routing_stats():

"""Get routing statistics."""

return {

"daily_spent": cost_router.daily_spent,

"daily_budget": cost_router.daily_budget,

"budget_remaining": cost_router.daily_budget - cost_router.daily_spent

}

@app.get("/health")

async def health():

return {"status": "healthy"}References

- LangChain Routing: https://python.langchain.com/docs/expression_language/how_to/routing

- LlamaIndex Routers: https://docs.llamaindex.ai/en/stable/module_guides/querying/router/

- Semantic Router: https://github.com/aurelio-labs/semantic-router

- OpenAI Model Selection: https://platform.openai.com/docs/guides/model-selection

Conclusion

Query routing is one of the highest-ROI optimizations for LLM applications. Start with simple keyword-based intent classification—it’s fast, interpretable, and handles 80% of cases well. Add embedding-based routing for nuanced queries that don’t match keywords. Use LLM-based classification only for edge cases where you need high accuracy. Map intents to model tiers: simple factual queries go to fast/cheap models, complex reasoning goes to powerful models. For RAG systems, route queries to relevant indexes based on semantic similarity to index descriptions. Implement cost-aware routing that respects per-request and daily budgets, automatically downgrading models when approaching limits. Monitor routing decisions and outcomes to continuously improve your classifiers. A/B test different routing strategies to find the optimal balance between cost and quality. The key insight is that most queries don’t need your most powerful model—intelligent routing lets you reserve expensive compute for queries that actually benefit from it, reducing costs by 60-80% while maintaining user satisfaction.

Discover more from C4: Container, Code, Cloud & Context

Subscribe to get the latest posts sent to your email.