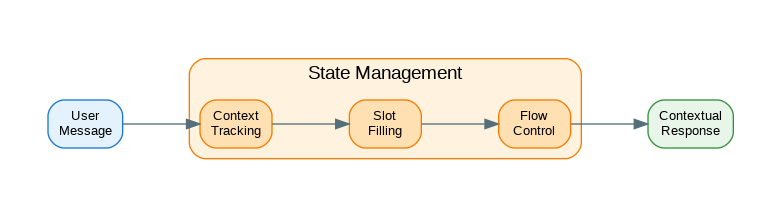

Introduction: Conversational AI applications need to track state across turns—remembering what users said, what information has been collected, and where they are in multi-step workflows. Unlike simple Q&A, task-oriented conversations require slot filling, context tracking, and flow control. This guide covers practical state management patterns: conversation context objects, slot-based information extraction, finite state machines for dialog flow, branching conversations, and persistence strategies that enable conversations to resume across sessions.

Conversation Context

    from dataclasses import dataclass, field
    from datetime import datetime
    from enum import Enum
    from typing import Any, Optional

    class ConversationPhase(str, Enum):
        GREETING = "greeting"
        INFORMATION_GATHERING = "information_gathering"
        PROCESSING = "processing"
        CONFIRMATION = "confirmation"
        COMPLETION = "completion"

    @dataclass
    class ConversationContext:
        """Track conversation state and context."""

        session_id: str
        user_id: Optional[str] = None
        phase: ConversationPhase = ConversationPhase.GREETING

        # Collected information
        slots: dict[str, Any] = field(default_factory=dict)

        # Conversation history
        messages: list[dict] = field(default_factory=list)

        # Metadata
        created_at: datetime = field(default_factory=datetime.now)
        updated_at: datetime = field(default_factory=datetime.now)
        turn_count: int = 0

        # Custom data
        metadata: dict = field(default_factory=dict)

        def add_message(self, role: str, content: str):
            """Add message to history."""
            self.messages.append({
                "role": role,
                "content": content,
                "timestamp": datetime.now().isoformat()
            })
            self.turn_count += 1
            self.updated_at = datetime.now()

        def set_slot(self, name: str, value: Any):
            """Set a slot value."""
            self.slots[name] = value
            self.updated_at = datetime.now()

        def get_slot(self, name: str, default: Any = None) -> Any:
            """Get a slot value."""
            return self.slots.get(name, default)

        def has_slot(self, name: str) -> bool:
            """Check if slot is filled."""
            return name in self.slots and self.slots[name] is not None

        def clear_slot(self, name: str):
            """Clear a slot."""
            if name in self.slots:
                del self.slots[name]

        def transition_to(self, phase: ConversationPhase):
            """Transition to new phase."""
            self.phase = phase
            self.updated_at = datetime.now()

        def get_recent_messages(self, count: int = 10) -> list[dict]:
            """Get recent messages for context."""
            return self.messages[-count:]

        def to_dict(self) -> dict:
            """Serialize to dictionary."""
            return {
                "session_id": self.session_id,
                "user_id": self.user_id,
                "phase": self.phase.value,
                "slots": self.slots,
                "messages": self.messages,
                "turn_count": self.turn_count,
                "metadata": self.metadata
            }

        @classmethod
        def from_dict(cls, data: dict) -> "ConversationContext":
            """Deserialize from dictionary."""
            ctx = cls(
                session_id=data["session_id"],
                user_id=data.get("user_id"),
                phase=ConversationPhase(data.get("phase", "greeting")),
                turn_count=data.get("turn_count", 0),
                metadata=data.get("metadata", {})
            )
            ctx.slots = data.get("slots", {})
            ctx.messages = data.get("messages", [])
            return ctx
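The `to_dict` method of `ConversationContext` leaves out `created_at` and `updated_at`, so a context restored with `from_dict` gets fresh timestamps. If those values matter (for example, to expire stale sessions), one option is to serialize them as ISO 8601 strings. The following is a minimal sketch of that idea on a trimmed-down stand-in class; `TimestampedContext` is a hypothetical name used only for illustration.

```python
from dataclasses import dataclass, field
from datetime import datetime
from typing import Any


@dataclass
class TimestampedContext:
    """Hypothetical, trimmed-down stand-in for ConversationContext."""

    session_id: str
    slots: dict[str, Any] = field(default_factory=dict)
    created_at: datetime = field(default_factory=datetime.now)
    updated_at: datetime = field(default_factory=datetime.now)

    def to_dict(self) -> dict:
        # datetime objects are not JSON-serializable, so store ISO 8601 strings.
        return {
            "session_id": self.session_id,
            "slots": self.slots,
            "created_at": self.created_at.isoformat(),
            "updated_at": self.updated_at.isoformat(),
        }

    @classmethod
    def from_dict(cls, data: dict) -> "TimestampedContext":
        ctx = cls(session_id=data["session_id"], slots=data.get("slots", {}))
        # Older records may lack the timestamp keys; keep the defaults then.
        if "created_at" in data:
            ctx.created_at = datetime.fromisoformat(data["created_at"])
        if "updated_at" in data:
            ctx.updated_at = datetime.fromisoformat(data["updated_at"])
        return ctx
```

The same two keys could be added to the full class in the same way; records written before the change would still load because the keys are optional on read.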

    class ContextManager:
        """Manage conversation contexts."""

        def __init__(self):
            self.contexts: dict[str, ConversationContext] = {}

        def get_or_create(self, session_id: str) -> ConversationContext:
            """Get existing context or create new one."""
            if session_id not in self.contexts:
                self.contexts[session_id] = ConversationContext(
                    session_id=session_id
                )
            return self.contexts[session_id]

        def delete(self, session_id: str):
            """Delete a context."""
            if session_id in self.contexts:
                del self.contexts[session_id]

Slot Filling

    import json
    from dataclasses import dataclass
    from enum import Enum
    from typing import Any, Callable, Optional

    class SlotType(str, Enum):
        STRING = "string"
        INTEGER = "integer"
        FLOAT = "float"
        BOOLEAN = "boolean"
        DATE = "date"
        EMAIL = "email"
        PHONE = "phone"
        CHOICE = "choice"

    @dataclass
    class SlotDefinition:
        """Definition of a slot to fill."""

        name: str
        slot_type: SlotType
        prompt: str
        required: bool = True
        choices: Optional[list[str]] = None
        validator: Optional[Callable[[Any], bool]] = None
        extractor: Optional[Callable[[str], Any]] = None

    class SlotFiller:
        """Extract and validate slot values from user input."""

        def __init__(self, client):
            self.client = client
            self.slots: dict[str, SlotDefinition] = {}

        def register_slot(self, slot: SlotDefinition):
            """Register a slot definition."""
            self.slots[slot.name] = slot

        def extract_slots(
            self,
            user_input: str,
            target_slots: Optional[list[str]] = None
        ) -> dict[str, Any]:
            """Extract slot values from user input."""
            slots_to_extract = target_slots or list(self.slots.keys())

            # Build extraction prompt
            slot_descriptions = []
            for name in slots_to_extract:
                slot = self.slots.get(name)
                if slot:
                    desc = f"- {name} ({slot.slot_type.value}): {slot.prompt}"
                    if slot.choices:
                        desc += f" Options: {', '.join(slot.choices)}"
                    slot_descriptions.append(desc)

            prompt = f"""Extract the following information from the user's message.
    Return JSON with the slot names as keys. Use null for missing values.

    Slots to extract:
    {chr(10).join(slot_descriptions)}

    User message: {user_input}

    JSON response:"""

            response = self.client.chat.completions.create(
                model="gpt-4o-mini",
                messages=[{"role": "user", "content": prompt}],
                response_format={"type": "json_object"}
            )
            extracted = json.loads(response.choices[0].message.content)

            # Validate extracted values
            validated = {}
            for name, value in extracted.items():
                if value is not None and name in self.slots:
                    slot = self.slots[name]

                    # Type conversion
                    value = self._convert_type(value, slot.slot_type)

                    # Custom validation
                    if slot.validator and not slot.validator(value):
                        continue

                    # Choice validation
                    if slot.choices and value not in slot.choices:
                        continue

                    validated[name] = value

            return validated

        def _convert_type(self, value: Any, slot_type: SlotType) -> Any:
            """Convert value to slot type."""
            try:
                if slot_type == SlotType.INTEGER:
                    return int(value)
                elif slot_type == SlotType.FLOAT:
                    return float(value)
                elif slot_type == SlotType.BOOLEAN:
                    if isinstance(value, bool):
                        return value
                    return str(value).lower() in ("true", "yes", "1")
                else:
                    # DATE, EMAIL, PHONE, and CHOICE are kept as plain strings
                    return str(value)
            except (TypeError, ValueError):
                # Conversion failed: pass the raw value on to the validators
                return value

        def get_missing_slots(
            self,
            context: ConversationContext,
            required_only: bool = True
        ) -> list[SlotDefinition]:
            """Get slots that still need to be filled."""
            missing = []
            for name, slot in self.slots.items():
                if required_only and not slot.required:
                    continue
                if not context.has_slot(name):
                    missing.append(slot)
            return missing

        def get_next_prompt(
            self,
            context: ConversationContext
        ) -> Optional[str]:
            """Get prompt for next missing slot."""
            missing = self.get_missing_slots(context)
            if missing:
                return missing[0].prompt
            return None
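`_convert_type` passes `EMAIL`, `PHONE`, and `DATE` values through as plain strings, so any format checking for those slot types has to come from each slot's `validator`. A minimal sketch of two such validators follows. The function names and the deliberately loose email pattern are illustrative assumptions, and the date check assumes the model was asked to return ISO 8601 dates.

```python
import re
from datetime import date

# Deliberately loose pattern: one "@", no whitespace, a dot in the domain part.
_EMAIL_RE = re.compile(r"[^@\s]+@[^@\s]+\.[^@\s]+")


def is_valid_email(value: object) -> bool:
    """Return True if value is a string that looks like an email address."""
    return isinstance(value, str) and _EMAIL_RE.fullmatch(value) is not None


def is_valid_iso_date(value: object) -> bool:
    """Return True if value parses as an ISO 8601 calendar date."""
    try:
        date.fromisoformat(value)
    except (TypeError, ValueError):
        # TypeError: not a string; ValueError: malformed or impossible date.
        return False
    return True
```

These could be passed as `validator=is_valid_email` or `validator=is_valid_iso_date` when constructing a `SlotDefinition`; values that fail are skipped by `extract_slots`, so the slot stays missing and its prompt is asked again.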

    # Form-based slot filling
    class FormFiller:
        """Fill a form through conversation."""

        def __init__(self, client, slots: list[SlotDefinition]):
            self.client = client
            self.slot_filler = SlotFiller(client)

            for slot in slots:
                self.slot_filler.register_slot(slot)

            self.slot_order = [s.name for s in slots]

        def process_input(
            self,
            context: ConversationContext,
            user_input: str
        ) -> dict:
            """Process user input and update context."""
            # Extract slots from input
            extracted = self.slot_filler.extract_slots(user_input)

            # Update context
            for name, value in extracted.items():
                context.set_slot(name, value)

            # Check completion
            missing = self.slot_filler.get_missing_slots(context)

            if not missing:
                return {
                    "complete": True,
                    "slots": context.slots
                }

            # Get next prompt
            next_prompt = missing[0].prompt

            return {
                "complete": False,
                "next_prompt": next_prompt,
                "missing_slots": [s.name for s in missing],
                "filled_slots": list(context.slots.keys())
            }

Dialog Flow Control

    from dataclasses import dataclass, field
    from typing import Callable, Optional

    @dataclass
    class DialogState:
        """A state in the dialog flow."""

        name: str
        entry_action: Optional[Callable[[ConversationContext], str]] = None
        transitions: dict[str, str] = field(default_factory=dict)  # condition -> next_state

    class DialogFlow:
        """Finite state machine for dialog control."""

        def __init__(self):
            self.states: dict[str, DialogState] = {}
            self.initial_state: Optional[str] = None

        def add_state(
            self,
            name: str,
            entry_action: Optional[Callable[[ConversationContext], str]] = None,
            transitions: Optional[dict[str, str]] = None,
            is_initial: bool = False
        ):
            """Add a state to the flow."""
            self.states[name] = DialogState(
                name=name,
                entry_action=entry_action,
                transitions=transitions or {}
            )
            if is_initial:
                self.initial_state = name

        def get_current_state(self, context: ConversationContext) -> Optional[DialogState]:
            """Get current state from context."""
            state_name = context.metadata.get("dialog_state", self.initial_state)
            return self.states.get(state_name)

        def transition(
            self,
            context: ConversationContext,
            condition: str
        ) -> Optional[str]:
            """Transition to next state based on condition."""
            current = self.get_current_state(context)
            if not current:
                return None

            next_state_name = current.transitions.get(condition)
            if next_state_name and next_state_name in self.states:
                context.metadata["dialog_state"] = next_state_name
                next_state = self.states[next_state_name]

                # Entering a state runs its entry action, which produces the reply
                if next_state.entry_action:
                    return next_state.entry_action(context)

            return None

        def process(
            self,
            context: ConversationContext,
            user_input: str
        ) -> str:
            """Process user input and return response."""
            current = self.get_current_state(context)
            if not current:
                context.metadata["dialog_state"] = self.initial_state
                current = self.states[self.initial_state]

            # Determine condition from input
            condition = self._classify_input(user_input, current)

            # Try to transition
            response = self.transition(context, condition)
            if response:
                return response

            # Stay in current state
            if current.entry_action:
                return current.entry_action(context)

            return "I'm not sure how to proceed. Can you rephrase?"

        def _classify_input(
            self,
            user_input: str,
            current_state: DialogState
        ) -> str:
            """Classify user input to determine transition."""
            # Simple keyword matching (substring-based, so "no" also matches "know")
            input_lower = user_input.lower()

            if any(w in input_lower for w in ["yes", "correct", "right", "confirm"]):
                return "confirm"

            if any(w in input_lower for w in ["no", "wrong", "cancel", "stop"]):
                return "cancel"

            if any(w in input_lower for w in ["help", "what", "how"]):
                return "help"

            return "input"
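The substring checks in `_classify_input` also match inside longer words: `"no"` is a substring of `"know"` and `"now"`, so a message like "I know the date now" is classified as `cancel`. One way to avoid this, sketched below, is to compare whole word tokens instead. `classify_keywords` is a hypothetical standalone helper that reuses the same keyword groups and the same precedence; it is still plain keyword matching and makes no attempt at understanding negation or context.

```python
import re

# Same keyword groups and precedence as the substring version.
_KEYWORDS = [
    ("confirm", {"yes", "correct", "right", "confirm"}),
    ("cancel", {"no", "wrong", "cancel", "stop"}),
    ("help", {"help", "what", "how"}),
]


def classify_keywords(user_input: str) -> str:
    """Classify input by whole-word keyword match; default to 'input'."""
    # Split into lowercase word tokens so "know" no longer matches "no".
    tokens = set(re.findall(r"[a-z']+", user_input.lower()))
    for condition, words in _KEYWORDS:
        if tokens & words:
            return condition
    return "input"
```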

    # Example: Booking flow
    def create_booking_flow(client) -> DialogFlow:
        """Create a booking dialog flow."""
        flow = DialogFlow()

        # Greeting state
        flow.add_state(
            name="greeting",
            entry_action=lambda ctx: "Hello! I can help you book an appointment. What service do you need?",
            transitions={
                "input": "collect_service"
            },
            is_initial=True
        )

        # Collect service
        flow.add_state(
            name="collect_service",
            entry_action=lambda ctx: "What date works best for you?",
            transitions={
                "input": "collect_date"
            }
        )

        # Collect date
        flow.add_state(
            name="collect_date",
            entry_action=lambda ctx: "What time would you prefer?",
            transitions={
                "input": "collect_time"
            }
        )

        # Collect time
        flow.add_state(
            name="collect_time",
            entry_action=lambda ctx: f"Great! Let me confirm: {ctx.slots}. Is this correct?",
            transitions={
                "confirm": "confirmed",
                "cancel": "greeting"
            }
        )

        # Confirmed
        flow.add_state(
            name="confirmed",
            entry_action=lambda ctx: "Your appointment is booked! Is there anything else?",
            transitions={
                "input": "greeting",
                "cancel": "goodbye"
            }
        )

        # Goodbye
        flow.add_state(
            name="goodbye",
            entry_action=lambda ctx: "Thank you! Have a great day!",
            transitions={}
        )

        return flow

Branching Conversations

    from dataclasses import dataclass
    from typing import Callable, Optional

    @dataclass
    class ConversationBranch:
        """A branch in the conversation tree."""

        name: str
        condition: Callable[[ConversationContext, str], bool]
        handler: Callable[[ConversationContext, str], str]
        priority: int = 0

    class BranchingConversation:
        """Handle branching conversation flows."""

        def __init__(self, client):
            self.client = client
            self.branches: list[ConversationBranch] = []
            self.default_handler: Optional[Callable] = None

        def add_branch(
            self,
            name: str,
            condition: Callable[[ConversationContext, str], bool],
            handler: Callable[[ConversationContext, str], str],
            priority: int = 0
        ):
            """Add a conversation branch."""
            self.branches.append(ConversationBranch(
                name=name,
                condition=condition,
                handler=handler,
                priority=priority
            ))

            # Sort by priority (highest first)
            self.branches.sort(key=lambda b: b.priority, reverse=True)

        def set_default(self, handler: Callable[[ConversationContext, str], str]):
            """Set default handler."""
            self.default_handler = handler

        def process(
            self,
            context: ConversationContext,
            user_input: str
        ) -> str:
            """Process input through branches."""
            # The first branch whose condition holds wins
            for branch in self.branches:
                if branch.condition(context, user_input):
                    context.metadata["current_branch"] = branch.name
                    return branch.handler(context, user_input)

            if self.default_handler:
                return self.default_handler(context, user_input)

            return "I'm not sure how to help with that."
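Because branches are kept sorted by descending priority and the first matching condition wins, priority is what decides between two branches that both match the same message. The following is a minimal function-based sketch of that dispatch rule, not the class itself: `dispatch`, the tuple layout, and the two sample branches are assumptions made for illustration, and a plain `dict` stands in for the conversation context.

```python
from typing import Callable

Condition = Callable[[dict, str], bool]
Handler = Callable[[dict, str], str]
Branch = tuple[int, Condition, Handler]  # (priority, condition, handler)


def dispatch(branches: list[Branch], ctx: dict, text: str) -> str:
    """Run the handler of the highest-priority branch whose condition holds."""
    for _, condition, handler in sorted(branches, key=lambda b: b[0], reverse=True):
        if condition(ctx, text):
            return handler(ctx, text)
    return "I'm not sure how to help with that."


# Two sample branches; a request for a human outranks the order lookup.
branches: list[Branch] = [
    (0, lambda ctx, text: "order" in text.lower(),
     lambda ctx, text: "Let me look up your order."),
    (10, lambda ctx, text: "agent" in text.lower(),
     lambda ctx, text: "Connecting you to an agent."),
]
```

With these branches, a message that mentions both an order and an agent is routed to the agent handler, regardless of the order in which the branches were added.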

    # Intent-based branching
    class IntentRouter:
        """Route conversations based on intent."""

        def __init__(self, client):
            self.client = client
            self.intent_handlers: dict[str, Callable] = {}

        def register_intent(
            self,
            intent: str,
            handler: Callable[[ConversationContext, str], str]
        ):
            """Register handler for intent."""
            self.intent_handlers[intent] = handler

        def classify_intent(self, user_input: str) -> str:
            """Classify user intent using LLM."""
            intents = list(self.intent_handlers.keys())

            prompt = f"""Classify the user's intent into one of these categories:
    {', '.join(intents)}

    User message: {user_input}

    Intent (just the category name):"""

            response = self.client.chat.completions.create(
                model="gpt-4o-mini",
                messages=[{"role": "user", "content": prompt}],
                max_tokens=20
            )

            intent = response.choices[0].message.content.strip().lower()

            # Find matching intent
            for registered in intents:
                if registered.lower() in intent:
                    return registered

            return "unknown"

        def process(
            self,
            context: ConversationContext,
            user_input: str
        ) -> str:
            """Process input based on intent."""
            intent = self.classify_intent(user_input)
            context.metadata["last_intent"] = intent

            handler = self.intent_handlers.get(intent)
            if handler:
                return handler(context, user_input)

            return "I'm not sure how to help with that. Can you rephrase?"
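The matching loop in `classify_intent` returns the first registered name that appears anywhere in the model's reply. When one intent name is a prefix of another, the result depends on registration order: if `cancel` was registered before `cancel_booking`, a reply of `cancel_booking` resolves to `cancel`. A sketch of a stricter mapping follows. `match_intent` is a hypothetical helper, and it assumes intent names consist only of letters, digits, and underscores.

```python
import re


def match_intent(model_reply: str, registered: list[str]) -> str:
    """Map a free-text model reply onto one registered intent name."""
    # Lowercase and replace anything that cannot be part of an intent name.
    reply = re.sub(r"[^a-z0-9_]+", " ", model_reply.lower()).strip()
    by_lower = {name.lower(): name for name in registered}

    # Prefer an exact match on the cleaned reply.
    if reply in by_lower:
        return by_lower[reply]

    # Otherwise accept a registered name that appears as a whole token,
    # taking the longest one if several do.
    tokens = set(reply.split())
    candidates = [name for low, name in by_lower.items() if low in tokens]
    return max(candidates, key=len) if candidates else "unknown"
```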

    # Sub-conversation handling
    class SubConversation:
        """Handle nested sub-conversations."""

        def __init__(self, name: str, handler: Callable):
            self.name = name
            self.handler = handler
            self.is_active = False

        def start(self, context: ConversationContext) -> str:
            """Start sub-conversation."""
            self.is_active = True
            context.metadata["sub_conversation"] = self.name
            return self.handler(context, "__start__")

        def process(self, context: ConversationContext, user_input: str) -> Optional[str]:
            """Process input in sub-conversation; returns None once it has ended."""
            result = self.handler(context, user_input)

            if result == "__end__":
                self.end(context)
                return None

            return result

        def end(self, context: ConversationContext):
            """End sub-conversation."""
            self.is_active = False
            context.metadata.pop("sub_conversation", None)

State Persistence

    import json
    import os
    from abc import ABC, abstractmethod
    from typing import Optional

    class StateStore(ABC):
        """Abstract state storage."""

        @abstractmethod
        def save(self, session_id: str, context: ConversationContext):
            pass

        @abstractmethod
        def load(self, session_id: str) -> Optional[ConversationContext]:
            pass

        @abstractmethod
        def delete(self, session_id: str):
            pass

    class InMemoryStore(StateStore):
        """In-memory state storage."""

        def __init__(self):
            self.store: dict[str, dict] = {}

        def save(self, session_id: str, context: ConversationContext):
            self.store[session_id] = context.to_dict()

        def load(self, session_id: str) -> Optional[ConversationContext]:
            data = self.store.get(session_id)
            if data:
                return ConversationContext.from_dict(data)
            return None

        def delete(self, session_id: str):
            self.store.pop(session_id, None)

    class RedisStore(StateStore):
        """Redis-based state storage."""

        def __init__(self, redis_url: str, ttl: int = 3600):
            # Imported here so redis is only required when this store is used
            import redis
            self.client = redis.from_url(redis_url)
            self.ttl = ttl  # seconds until an idle conversation expires

        def save(self, session_id: str, context: ConversationContext):
            key = f"conversation:{session_id}"
            data = json.dumps(context.to_dict())
            # SETEX stores the value and resets the expiry on every save
            self.client.setex(key, self.ttl, data)

        def load(self, session_id: str) -> Optional[ConversationContext]:
            key = f"conversation:{session_id}"
            data = self.client.get(key)
            if data:
                return ConversationContext.from_dict(json.loads(data))
            return None

        def delete(self, session_id: str):
            key = f"conversation:{session_id}"
            self.client.delete(key)

    class FileStore(StateStore):
        """File-based state storage."""

        def __init__(self, directory: str):
            self.directory = directory
            os.makedirs(directory, exist_ok=True)

        def _get_path(self, session_id: str) -> str:
            return os.path.join(self.directory, f"{session_id}.json")

        def save(self, session_id: str, context: ConversationContext):
            path = self._get_path(session_id)
            with open(path, 'w') as f:
                json.dump(context.to_dict(), f)

        def load(self, session_id: str) -> Optional[ConversationContext]:
            path = self._get_path(session_id)
            if os.path.exists(path):
                with open(path, 'r') as f:
                    return ConversationContext.from_dict(json.load(f))
            return None

        def delete(self, session_id: str):
            path = self._get_path(session_id)
            if os.path.exists(path):
                os.remove(path)
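`FileStore` builds its file path directly from `session_id`. When session IDs can come from clients, a value such as `../../etc/passwd` would resolve outside the storage directory. A minimal sketch of one mitigation follows: accept only IDs that match a conservative pattern, and write through a temporary file so that a crash mid-write does not leave a truncated JSON file behind. The helper names and the allowed character set are assumptions for illustration; UUID strings fit the pattern.

```python
import json
import os
import re
import tempfile

# Assumed policy: letters, digits, underscore, hyphen; 1 to 64 characters.
_SESSION_ID_RE = re.compile(r"[A-Za-z0-9_-]{1,64}")


def session_path(directory: str, session_id: str) -> str:
    """Return the JSON path for session_id, rejecting unsafe IDs."""
    if not _SESSION_ID_RE.fullmatch(session_id):
        raise ValueError(f"invalid session id: {session_id!r}")
    return os.path.join(directory, f"{session_id}.json")


def atomic_write_json(path: str, data: dict) -> None:
    """Write data to path via a temporary file followed by a rename."""
    directory = os.path.dirname(path) or "."
    # Create the temp file in the target directory so the rename
    # stays on one filesystem.
    fd, tmp_path = tempfile.mkstemp(dir=directory, suffix=".tmp")
    try:
        with os.fdopen(fd, "w") as f:
            json.dump(data, f)
        os.replace(tmp_path, path)  # replaces any existing file in one step
    except BaseException:
        os.unlink(tmp_path)  # clean up the partial temp file
        raise
```

In `FileStore`, `_get_path` could delegate to `session_path` and `save` to `atomic_write_json(path, context.to_dict())`.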

    # Conversation manager with persistence
    class PersistentConversationManager:
        """Manage conversations with persistence."""

        def __init__(self, store: StateStore):
            self.store = store

        def get_context(self, session_id: str) -> ConversationContext:
            """Get or create conversation context."""
            context = self.store.load(session_id)
            if not context:
                context = ConversationContext(session_id=session_id)
            return context

        def save_context(self, context: ConversationContext):
            """Save conversation context."""
            self.store.save(context.session_id, context)

        def end_conversation(self, session_id: str):
            """End and delete conversation."""
            self.store.delete(session_id)

Production Conversation Service

    import uuid
    from typing import Optional

    from fastapi import FastAPI, HTTPException
    from openai import OpenAI
    from pydantic import BaseModel

    app = FastAPI()

    # Initialize components
    client = OpenAI()
    store = InMemoryStore()
    manager = PersistentConversationManager(store)

    # Setup slot filler
    slot_filler = SlotFiller(client)
    slot_filler.register_slot(SlotDefinition(
        name="name",
        slot_type=SlotType.STRING,
        prompt="What's your name?"
    ))
    slot_filler.register_slot(SlotDefinition(
        name="email",
        slot_type=SlotType.EMAIL,
        prompt="What's your email address?"
    ))
    slot_filler.register_slot(SlotDefinition(
        name="service",
        slot_type=SlotType.CHOICE,
        prompt="What service do you need?",
        choices=["consultation", "support", "sales"]
    ))

    class ChatRequest(BaseModel):
        session_id: Optional[str] = None
        message: str

    @app.post("/v1/chat")
    async def chat(request: ChatRequest):
        """Process chat message with state management."""
        # Get or create session
        session_id = request.session_id or str(uuid.uuid4())
        context = manager.get_context(session_id)

        # Add user message
        context.add_message("user", request.message)

        # Extract slots
        extracted = slot_filler.extract_slots(request.message)
        for name, value in extracted.items():
            context.set_slot(name, value)

        # Check for missing slots
        missing = slot_filler.get_missing_slots(context)

        if missing:
            response = missing[0].prompt
        else:
            # All slots filled - generate response
            response = await generate_response(context, request.message)

        # Add assistant message
        context.add_message("assistant", response)

        # Save context
        manager.save_context(context)

        return {
            "session_id": session_id,
            "response": response,
            "slots": context.slots,
            "phase": context.phase.value
        }

    async def generate_response(context: ConversationContext, user_input: str) -> str:
        """Generate contextual response."""
        # Build context for LLM
        system_prompt = f"""You are a helpful assistant.
    User information: {context.slots}
    Conversation phase: {context.phase.value}"""

        messages = [{"role": "system", "content": system_prompt}]
        # Send only role and content; the stored "timestamp" key is not part
        # of the chat message format
        messages.extend(
            {"role": m["role"], "content": m["content"]}
            for m in context.get_recent_messages(10)
        )

        # Note: this synchronous client call blocks the event loop while it runs
        response = client.chat.completions.create(
            model="gpt-4o-mini",
            messages=messages
        )

        return response.choices[0].message.content

    @app.get("/v1/session/{session_id}")
    async def get_session(session_id: str):
        """Get session state."""
        # Load directly from the store so an unknown ID returns 404
        # instead of a freshly created empty context
        context = store.load(session_id)
        if context is None:
            raise HTTPException(status_code=404, detail="Session not found")

        return {
            "session_id": session_id,
            "slots": context.slots,
            "phase": context.phase.value,
            "turn_count": context.turn_count
        }

    @app.delete("/v1/session/{session_id}")
    async def end_session(session_id: str):
        """End session."""
        manager.end_conversation(session_id)
        return {"deleted": True}

    @app.get("/health")
    async def health():
        return {"status": "healthy"}

Conclusion

Effective conversation state management transforms chatbots from simple Q&A systems into capable task-oriented assistants. Use conversation context objects to track all relevant information across turns. Implement slot filling for structured information gathering—define slots with types, validators, and prompts. Use dialog flows (finite state machines) for predictable multi-step processes. Add branching logic for handling different user intents and conversation paths. Persist state to enable conversations that resume across sessions. The key is balancing structure with flexibility—enough state tracking to maintain context, but enough LLM involvement to handle unexpected inputs gracefully.
