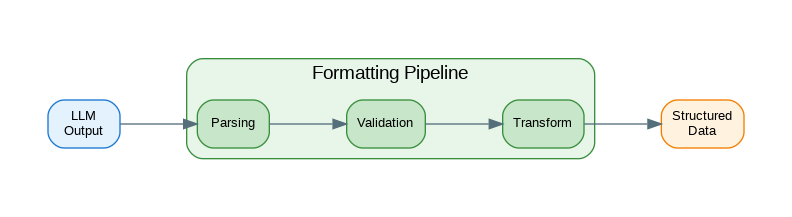

Introduction: LLM outputs are inherently unstructured text, but applications need structured data—JSON objects, typed responses, specific formats. Getting reliable structured output requires careful prompt engineering, output parsing, validation, and error recovery. This guide covers practical output formatting techniques: JSON mode and structured outputs, Pydantic-based parsing, format enforcement with retries, template-based formatting, and strategies for handling malformed outputs gracefully.

JSON Mode and Structured Outputs

from openai import OpenAI

from pydantic import BaseModel, Field

from typing import Optional

import json

client = OpenAI()

# Basic JSON mode

def get_json_response(prompt: str) -> dict:

"""Get JSON response using JSON mode."""

response = client.chat.completions.create(

model="gpt-4o-mini",

messages=[

{

"role": "system",

"content": "You are a helpful assistant that responds in JSON format."

},

{"role": "user", "content": prompt}

],

response_format={"type": "json_object"}

)

return json.loads(response.choices[0].message.content)

# Structured outputs with schema

class ProductReview(BaseModel):

"""Schema for product review analysis."""

sentiment: str = Field(description="positive, negative, or neutral")

score: float = Field(description="Sentiment score from 0 to 1")

key_points: list[str] = Field(description="Main points from the review")

recommendation: bool = Field(description="Whether reviewer recommends product")

def analyze_review_structured(review_text: str) -> ProductReview:

"""Analyze review using structured outputs."""

response = client.beta.chat.completions.parse(

model="gpt-4o-mini",

messages=[

{

"role": "system",

"content": "Analyze the product review and extract structured information."

},

{"role": "user", "content": review_text}

],

response_format=ProductReview

)

return response.choices[0].message.parsed

# Complex nested schema

class Address(BaseModel):

street: str

city: str

state: str

zip_code: str

country: str = "USA"

class Person(BaseModel):

name: str

email: Optional[str] = None

phone: Optional[str] = None

address: Optional[Address] = None

class ExtractedEntities(BaseModel):

"""Extracted entities from text."""

people: list[Person] = Field(default_factory=list)

organizations: list[str] = Field(default_factory=list)

dates: list[str] = Field(default_factory=list)

locations: list[str] = Field(default_factory=list)

def extract_entities(text: str) -> ExtractedEntities:

"""Extract entities using structured output."""

response = client.beta.chat.completions.parse(

model="gpt-4o-mini",

messages=[

{

"role": "system",

"content": "Extract all entities from the text."

},

{"role": "user", "content": text}

],

response_format=ExtractedEntities

)

return response.choices[0].message.parsed

# Enum constraints

from enum import Enum

class Priority(str, Enum):

LOW = "low"

MEDIUM = "medium"

HIGH = "high"

CRITICAL = "critical"

class TaskClassification(BaseModel):

"""Task classification result."""

category: str

priority: Priority

estimated_hours: float

requires_approval: bool

tags: list[str]

def classify_task(task_description: str) -> TaskClassification:

"""Classify task with enum constraints."""

response = client.beta.chat.completions.parse(

model="gpt-4o-mini",

messages=[

{

"role": "system",

"content": "Classify the task and estimate effort."

},

{"role": "user", "content": task_description}

],

response_format=TaskClassification

)

return response.choices[0].message.parsedPydantic-Based Parsing

from pydantic import BaseModel, Field, validator, field_validator

from typing import Any, Optional

import json

import re

class OutputParser:

"""Parse LLM outputs into Pydantic models."""

def __init__(self, model: type[BaseModel]):

self.model = model

def parse(self, text: str) -> BaseModel:

"""Parse text into model."""

# Try to extract JSON from text

json_str = self._extract_json(text)

if json_str:

data = json.loads(json_str)

return self.model.model_validate(data)

raise ValueError(f"Could not parse output: {text[:100]}")

def _extract_json(self, text: str) -> Optional[str]:

"""Extract JSON from text that may contain other content."""

# Try direct parse first

try:

json.loads(text)

return text

except:

pass

# Look for JSON in code blocks

code_block_pattern = r"```(?:json)?\s*([\s\S]*?)```"

matches = re.findall(code_block_pattern, text)

for match in matches:

try:

json.loads(match.strip())

return match.strip()

except:

continue

# Look for JSON objects

brace_pattern = r"\{[\s\S]*\}"

matches = re.findall(brace_pattern, text)

for match in matches:

try:

json.loads(match)

return match

except:

continue

return None

def get_format_instructions(self) -> str:

"""Get format instructions for the model."""

schema = self.model.model_json_schema()

return f"""Respond with a JSON object matching this schema:

{json.dumps(schema, indent=2)}

Important: Return ONLY the JSON object, no additional text."""

# Validated models

class ValidatedResponse(BaseModel):

"""Response with custom validation."""

answer: str = Field(min_length=10)

confidence: float = Field(ge=0.0, le=1.0)

sources: list[str] = Field(min_length=1)

@field_validator('sources')

@classmethod

def validate_sources(cls, v):

"""Ensure sources are URLs or valid references."""

for source in v:

if not (source.startswith('http') or len(source) > 5):

raise ValueError(f"Invalid source: {source}")

return v

class DateRange(BaseModel):

"""Date range with validation."""

start_date: str

end_date: str

@field_validator('start_date', 'end_date')

@classmethod

def validate_date_format(cls, v):

"""Validate date format."""

from datetime import datetime

try:

datetime.strptime(v, "%Y-%m-%d")

except ValueError:

raise ValueError(f"Date must be YYYY-MM-DD format: {v}")

return v

# Flexible parsing with defaults

class FlexibleParser:

"""Parser that handles partial/malformed outputs."""

def __init__(self, model: type[BaseModel]):

self.model = model

def parse_with_defaults(

self,

text: str,

defaults: dict = None

) -> BaseModel:

"""Parse with fallback to defaults."""

defaults = defaults or {}

try:

# Try normal parsing

json_str = self._extract_json(text)

if json_str:

data = json.loads(json_str)

# Merge with defaults

merged = {**defaults, **data}

return self.model.model_validate(merged)

except Exception as e:

pass

# Return model with defaults

return self.model.model_validate(defaults)

def _extract_json(self, text: str) -> Optional[str]:

"""Extract JSON from text."""

# Same as OutputParser._extract_json

try:

json.loads(text)

return text

except:

pass

brace_pattern = r"\{[\s\S]*\}"

matches = re.findall(brace_pattern, text)

for match in matches:

try:

json.loads(match)

return match

except:

continue

return NoneFormat Enforcement with Retries

from dataclasses import dataclass

from typing import TypeVar, Generic

T = TypeVar('T', bound=BaseModel)

@dataclass

class ParseResult(Generic[T]):

"""Result of parsing attempt."""

success: bool

result: Optional[T] = None

error: Optional[str] = None

attempts: int = 0

class RetryingParser(Generic[T]):

"""Parser with automatic retry on failure."""

def __init__(

self,

client,

model_class: type[T],

max_retries: int = 3

):

self.client = client

self.model_class = model_class

self.max_retries = max_retries

self.parser = OutputParser(model_class)

def parse_with_retry(

self,

prompt: str,

system_prompt: str = None

) -> ParseResult[T]:

"""Parse with automatic retry on failure."""

messages = []

if system_prompt:

messages.append({"role": "system", "content": system_prompt})

# Add format instructions

format_instructions = self.parser.get_format_instructions()

full_prompt = f"{prompt}\n\n{format_instructions}"

messages.append({"role": "user", "content": full_prompt})

last_error = None

for attempt in range(self.max_retries):

try:

response = self.client.chat.completions.create(

model="gpt-4o-mini",

messages=messages,

response_format={"type": "json_object"}

)

content = response.choices[0].message.content

result = self.parser.parse(content)

return ParseResult(

success=True,

result=result,

attempts=attempt + 1

)

except Exception as e:

last_error = str(e)

# Add error feedback for retry

messages.append({

"role": "assistant",

"content": content if 'content' in dir() else ""

})

messages.append({

"role": "user",

"content": f"That response had an error: {last_error}. Please try again with valid JSON matching the schema."

})

return ParseResult(

success=False,

error=last_error,

attempts=self.max_retries

)

# Self-correcting parser

class SelfCorrectingParser(Generic[T]):

"""Parser that uses LLM to fix malformed outputs."""

def __init__(self, client, model_class: type[T]):

self.client = client

self.model_class = model_class

def parse_and_correct(self, text: str) -> T:

"""Parse with LLM-assisted correction."""

# Try direct parsing first

try:

data = json.loads(text)

return self.model_class.model_validate(data)

except Exception as initial_error:

pass

# Ask LLM to fix the output

schema = self.model_class.model_json_schema()

correction_prompt = f"""The following text should be valid JSON matching this schema:

{json.dumps(schema, indent=2)}

Text to fix:

{text}

Return ONLY the corrected JSON, no explanation."""

response = self.client.chat.completions.create(

model="gpt-4o-mini",

messages=[{"role": "user", "content": correction_prompt}],

response_format={"type": "json_object"}

)

corrected = response.choices[0].message.content

data = json.loads(corrected)

return self.model_class.model_validate(data)

# Streaming parser

class StreamingParser:

"""Parse structured output from streaming response."""

def __init__(self, model_class: type[BaseModel]):

self.model_class = model_class

self.buffer = ""

def feed(self, chunk: str) -> Optional[BaseModel]:

"""Feed chunk and try to parse."""

self.buffer += chunk

# Try to parse complete JSON

try:

# Look for complete JSON object

if self.buffer.count('{') == self.buffer.count('}'):

data = json.loads(self.buffer)

return self.model_class.model_validate(data)

except:

pass

return None

def reset(self):

"""Reset buffer."""

self.buffer = ""Template-Based Formatting

from dataclasses import dataclass

from typing import Callable

import re

@dataclass

class OutputTemplate:

"""Template for structured output."""

name: str

template: str

parser: Callable[[str], dict]

class TemplateFormatter:

"""Format outputs using templates."""

def __init__(self):

self.templates: dict[str, OutputTemplate] = {}

def register_template(

self,

name: str,

template: str,

parser: Callable[[str], dict] = None

):

"""Register an output template."""

self.templates[name] = OutputTemplate(

name=name,

template=template,

parser=parser or self._default_parser

)

def get_format_prompt(self, template_name: str) -> str:

"""Get format instructions for template."""

template = self.templates.get(template_name)

if not template:

raise ValueError(f"Unknown template: {template_name}")

return f"""Format your response exactly like this template:

{template.template}

Fill in the bracketed sections with appropriate content."""

def parse_output(self, template_name: str, output: str) -> dict:

"""Parse output using template."""

template = self.templates.get(template_name)

if not template:

raise ValueError(f"Unknown template: {template_name}")

return template.parser(output)

def _default_parser(self, output: str) -> dict:

"""Default parser extracts key-value pairs."""

result = {}

# Look for "Key: Value" patterns

pattern = r"^([A-Za-z_]+):\s*(.+)$"

for line in output.split('\n'):

match = re.match(pattern, line.strip())

if match:

key = match.group(1).lower()

value = match.group(2).strip()

result[key] = value

return result

# Common templates

def setup_common_templates(formatter: TemplateFormatter):

"""Setup common output templates."""

# Summary template

formatter.register_template(

name="summary",

template="""Summary: [Brief summary in 2-3 sentences]

Key Points:

- [Point 1]

- [Point 2]

- [Point 3]

Conclusion: [Final takeaway]""",

parser=lambda x: parse_summary(x)

)

# Analysis template

formatter.register_template(

name="analysis",

template="""Topic: [Main topic]

Analysis:

[Detailed analysis paragraph]

Pros:

- [Pro 1]

- [Pro 2]

Cons:

- [Con 1]

- [Con 2]

Recommendation: [Your recommendation]""",

parser=lambda x: parse_analysis(x)

)

# Code review template

formatter.register_template(

name="code_review",

template="""Overall Assessment: [Good/Needs Work/Poor]

Issues Found:

1. [Issue description] - Severity: [High/Medium/Low]

2. [Issue description] - Severity: [High/Medium/Low]

Suggestions:

- [Suggestion 1]

- [Suggestion 2]

Code Quality Score: [1-10]""",

parser=lambda x: parse_code_review(x)

)

def parse_summary(output: str) -> dict:

"""Parse summary template output."""

result = {"key_points": []}

lines = output.split('\n')

current_section = None

for line in lines:

line = line.strip()

if line.startswith("Summary:"):

result["summary"] = line.replace("Summary:", "").strip()

elif line.startswith("Conclusion:"):

result["conclusion"] = line.replace("Conclusion:", "").strip()

elif line.startswith("Key Points:"):

current_section = "key_points"

elif line.startswith("- ") and current_section == "key_points":

result["key_points"].append(line[2:])

return result

def parse_analysis(output: str) -> dict:

"""Parse analysis template output."""

result = {"pros": [], "cons": []}

lines = output.split('\n')

current_section = None

for line in lines:

line = line.strip()

if line.startswith("Topic:"):

result["topic"] = line.replace("Topic:", "").strip()

elif line.startswith("Recommendation:"):

result["recommendation"] = line.replace("Recommendation:", "").strip()

elif line == "Pros:":

current_section = "pros"

elif line == "Cons:":

current_section = "cons"

elif line.startswith("- "):

if current_section in result:

result[current_section].append(line[2:])

return result

def parse_code_review(output: str) -> dict:

"""Parse code review template output."""

result = {"issues": [], "suggestions": []}

lines = output.split('\n')

current_section = None

for line in lines:

line = line.strip()

if line.startswith("Overall Assessment:"):

result["assessment"] = line.replace("Overall Assessment:", "").strip()

elif line.startswith("Code Quality Score:"):

score_str = line.replace("Code Quality Score:", "").strip()

try:

result["score"] = int(score_str.split('/')[0])

except:

result["score"] = None

elif "Issues Found:" in line:

current_section = "issues"

elif "Suggestions:" in line:

current_section = "suggestions"

elif line.startswith("- ") and current_section == "suggestions":

result["suggestions"].append(line[2:])

elif re.match(r"^\d+\.", line) and current_section == "issues":

result["issues"].append(line)Production Output Service

from fastapi import FastAPI, HTTPException

from pydantic import BaseModel

from typing import Any, Optional

app = FastAPI()

# Initialize components

from openai import OpenAI

client = OpenAI()

# Define output schemas

class SentimentResult(BaseModel):

sentiment: str

confidence: float

explanation: str

class SummaryResult(BaseModel):

summary: str

key_points: list[str]

word_count: int

class ExtractionResult(BaseModel):

entities: list[dict]

relationships: list[dict]

# Initialize parsers

sentiment_parser = RetryingParser(client, SentimentResult)

summary_parser = RetryingParser(client, SummaryResult)

class AnalyzeRequest(BaseModel):

text: str

output_type: str # sentiment, summary, extraction

schema: Optional[dict] = None

@app.post("/v1/analyze")

async def analyze_text(request: AnalyzeRequest):

"""Analyze text with structured output."""

if request.output_type == "sentiment":

result = sentiment_parser.parse_with_retry(

f"Analyze the sentiment of this text:\n\n{request.text}",

system_prompt="You are a sentiment analysis expert."

)

if result.success:

return {

"type": "sentiment",

"result": result.result.model_dump(),

"attempts": result.attempts

}

else:

raise HTTPException(500, f"Parsing failed: {result.error}")

elif request.output_type == "summary":

result = summary_parser.parse_with_retry(

f"Summarize this text:\n\n{request.text}",

system_prompt="You are a summarization expert."

)

if result.success:

return {

"type": "summary",

"result": result.result.model_dump(),

"attempts": result.attempts

}

else:

raise HTTPException(500, f"Parsing failed: {result.error}")

elif request.output_type == "extraction":

# Use structured outputs for custom schema

response = client.beta.chat.completions.parse(

model="gpt-4o-mini",

messages=[

{

"role": "system",

"content": "Extract entities and relationships from text."

},

{"role": "user", "content": request.text}

],

response_format=ExtractionResult

)

return {

"type": "extraction",

"result": response.choices[0].message.parsed.model_dump()

}

else:

raise HTTPException(400, f"Unknown output type: {request.output_type}")

class FormatRequest(BaseModel):

text: str

template: str

# Template formatter

formatter = TemplateFormatter()

setup_common_templates(formatter)

@app.post("/v1/format")

async def format_output(request: FormatRequest):

"""Format text using template."""

try:

format_prompt = formatter.get_format_prompt(request.template)

response = client.chat.completions.create(

model="gpt-4o-mini",

messages=[

{"role": "system", "content": format_prompt},

{"role": "user", "content": request.text}

]

)

output = response.choices[0].message.content

parsed = formatter.parse_output(request.template, output)

return {

"template": request.template,

"raw_output": output,

"parsed": parsed

}

except ValueError as e:

raise HTTPException(400, str(e))

@app.get("/v1/templates")

async def list_templates():

"""List available templates."""

return {

"templates": list(formatter.templates.keys())

}

@app.get("/health")

async def health():

return {"status": "healthy"}References

- OpenAI Structured Outputs: https://platform.openai.com/docs/guides/structured-outputs

- Pydantic: https://docs.pydantic.dev/

- LangChain Output Parsers: https://python.langchain.com/docs/modules/model_io/output_parsers/

- Instructor Library: https://python.useinstructor.com/

Conclusion

Reliable structured output is essential for production LLM applications. Use JSON mode or structured outputs when available—they significantly reduce parsing failures. Define Pydantic models with validation rules to catch malformed data early. Implement retry logic with error feedback to handle occasional failures gracefully. Use template-based formatting for consistent output structure when JSON isn’t appropriate. For streaming applications, build incremental parsers that can handle partial outputs. The key is defense in depth: clear format instructions, native JSON mode, robust parsing, validation, and graceful error handling. Well-structured outputs make LLM responses predictable and integrable with downstream systems.

Discover more from C4: Container, Code, Cloud & Context

Subscribe to get the latest posts sent to your email.