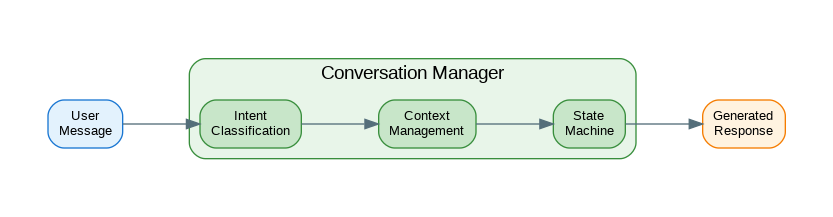

Introduction: Effective conversational AI requires more than calling an LLM; it needs thoughtful conversation design. That means managing multi-turn context, understanding user intent, recovering gracefully from errors, and keeping a consistent personality. This guide covers the essential conversation patterns: intent classification and routing, slot filling for structured data collection, conversation state machines, context window management, and error recovery. It finishes with a production service that combines them. Together, these patterns turn basic LLM calls into polished conversational experiences.

Intent Classification

```python
import json
from enum import Enum
from typing import Optional

from openai import OpenAI
from pydantic import BaseModel

client = OpenAI()  # Reads OPENAI_API_KEY from the environment


class Intent(str, Enum):
    GREETING = "greeting"
    QUESTION = "question"
    COMPLAINT = "complaint"
    PURCHASE = "purchase"
    SUPPORT = "support"
    FEEDBACK = "feedback"
    GOODBYE = "goodbye"
    UNKNOWN = "unknown"


class IntentResult(BaseModel):
    intent: Intent
    confidence: float
    entities: dict = {}  # Pydantic copies mutable defaults per instance


def classify_intent(message: str, context: Optional[list[dict]] = None) -> IntentResult:
    """Classify user intent from a message, optionally using recent turns as context."""
    context_block = ""
    if context:
        recent = context[-3:]  # Last 3 turns
        turns = "\n".join(f"{m['role']}: {m['content']}" for m in recent)
        context_block = f"Recent conversation context:\n{turns}\n"

    prompt = f"""Classify the user's intent from their message.

Available intents:
- greeting: Hello, hi, good morning, etc.
- question: Asking for information
- complaint: Expressing dissatisfaction
- purchase: Wanting to buy something
- support: Technical help or troubleshooting
- feedback: Providing opinions or suggestions
- goodbye: Ending conversation
- unknown: Doesn't fit other categories

{context_block}
User message: {message}

Return JSON:
{{"intent": "intent_name", "confidence": 0.0-1.0, "entities": {{"key": "value"}}}}

Extract relevant entities like product names, issue types, etc."""

    response = client.chat.completions.create(
        model="gpt-4o-mini",
        messages=[{"role": "user", "content": prompt}],
        response_format={"type": "json_object"},
    )
    data = json.loads(response.choices[0].message.content)

    # The model can return a label outside the enum; fall back to UNKNOWN
    # instead of letting Intent(...) raise ValueError.
    try:
        intent = Intent(data.get("intent", "unknown"))
    except ValueError:
        intent = Intent.UNKNOWN

    return IntentResult(
        intent=intent,
        confidence=float(data.get("confidence", 0.5)),
        entities=data.get("entities") or {},
    )
```
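Classification only labels a message. Routing is the step that decides what happens next. The following is a minimal sketch of that step. It assumes a hypothetical `route_intent` helper that takes the intent label as a plain string (for example `result.intent.value`). It also uses an arbitrary confidence threshold of 0.6, which you would tune on your own traffic. Low-confidence or unmapped intents fall through to a clarification handler instead of being trusted.

```python
from typing import Callable

# Hypothetical handler signature: (message, entities) -> reply text
Handler = Callable[[str, dict], str]


def route_intent(
    intent: str,
    confidence: float,
    message: str,
    entities: dict,
    routes: dict[str, Handler],
    fallback: Handler,
    threshold: float = 0.6,  # assumed cut-off; tune on real conversations
) -> str:
    """Dispatch to the handler registered for `intent`, else to `fallback`."""
    # Treat a low-confidence label as "unknown": asking a clarifying question
    # is usually cheaper than confidently answering the wrong request.
    if confidence < threshold:
        return fallback(message, entities)
    return routes.get(intent, fallback)(message, entities)


def handle_complaint(message: str, entities: dict) -> str:
    return "I'm sorry to hear that. What's your order number?"


def handle_purchase(message: str, entities: dict) -> str:
    product = entities.get("product")
    if product:
        return f"Great! Let's find the right {product}."
    return "Great! What product are you interested in?"


def ask_for_clarification(message: str, entities: dict) -> str:
    return "Could you tell me a bit more about what you need?"


routes = {"complaint": handle_complaint, "purchase": handle_purchase}

print(route_intent("purchase", 0.9, "I want a laptop", {"product": "laptop"}, routes, ask_for_clarification))
# Great! Let's find the right laptop.
print(route_intent("purchase", 0.4, "laptop?", {}, routes, ask_for_clarification))
# Could you tell me a bit more about what you need?
```

With a dispatch table, supporting a new intent becomes a one-line change. The threshold gives you a single knob for trading wrong routes against extra clarifying questions.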

```python
# Usage
result = classify_intent("I've been waiting for my order for 2 weeks!")
print(f"Intent: {result.intent.value}, Confidence: {result.confidence}")
print(f"Entities: {result.entities}")
```

Slot Filling

```python
import json
import re
from dataclasses import dataclass, field
from typing import Any, Callable, Optional

# Reuses `client` (OpenAI) from the intent classification example.


@dataclass
class Slot:
    name: str
    description: str
    required: bool = True
    value: Any = None
    validator: Optional[Callable[[Any], bool]] = None
    prompt: Optional[str] = None


@dataclass
class SlotFillingState:
    slots: dict[str, Slot] = field(default_factory=dict)
    current_slot: Optional[str] = None
    completed: bool = False


class SlotFiller:
    """Fill slots through conversation."""

    def __init__(self, slots: list[Slot]):
        self.state = SlotFillingState(slots={s.name: s for s in slots})

    def extract_slot_values(self, message: str) -> dict[str, Any]:
        """Ask the LLM to extract values for any still-unfilled slots."""
        unfilled = [s for s in self.state.slots.values() if s.value is None]
        if not unfilled:
            return {}

        slot_descriptions = "\n".join(f"- {s.name}: {s.description}" for s in unfilled)
        prompt = f"""Extract values for these slots from the user message.

Slots to fill:
{slot_descriptions}

User message: {message}

Return JSON with slot names as keys and extracted values (or null if not found):
{{"slot_name": "value_or_null"}}"""

        response = client.chat.completions.create(
            model="gpt-4o-mini",
            messages=[{"role": "user", "content": prompt}],
            response_format={"type": "json_object"},
        )
        return json.loads(response.choices[0].message.content)

    def process_message(self, message: str) -> Optional[str]:
        """Update slots from the message; return the next prompt, or None when done."""
        extracted = self.extract_slot_values(message)

        errors = []
        for name, value in extracted.items():
            # Ignore keys that aren't slots and values the model couldn't find
            if name not in self.state.slots or value is None:
                continue
            slot = self.state.slots[name]
            if slot.validator and not slot.validator(value):
                errors.append(f"Invalid value for {name}. {slot.prompt or f'Please provide a valid {name}.'}")
                continue  # keep other valid values from the same message
            slot.value = value

        if errors:
            return errors[0]

        # Ask for the next unfilled required slot
        for slot in self.state.slots.values():
            if slot.required and slot.value is None:
                self.state.current_slot = slot.name
                return slot.prompt or f"What is your {slot.name}?"

        # All required slots filled
        self.state.current_slot = None
        self.state.completed = True
        return None

    def get_filled_slots(self) -> dict[str, Any]:
        """Get all filled slot values."""
        return {name: slot.value for name, slot in self.state.slots.items() if slot.value is not None}


# Usage - Booking system
def validate_email(email: Any) -> bool:
    # Deliberately loose shape check, not full RFC 5322 validation
    return isinstance(email, str) and "@" in email and "." in email


def validate_date(date: Any) -> bool:
    # fullmatch rejects trailing junk such as "2024-03-15abc"
    return isinstance(date, str) and re.fullmatch(r"\d{4}-\d{2}-\d{2}", date) is not None
```
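The regex in `validate_date` checks only the shape of the string, so a value like `2024-13-45` passes. A stricter validator can parse the date and reject impossible or past dates. The sketch below is minimal. `validate_booking_date` and its `today` parameter are hypothetical names, and "no bookings in the past" is an assumed business rule.

```python
import datetime
import re
from typing import Any, Optional


def validate_booking_date(value: Any, today: Optional[datetime.date] = None) -> bool:
    """Accept YYYY-MM-DD strings naming a real calendar date that isn't in the past."""
    # Enforce the exact format first: on Python 3.11+ date.fromisoformat
    # also accepts other ISO 8601 forms such as "20240315".
    if not isinstance(value, str) or not re.fullmatch(r"\d{4}-\d{2}-\d{2}", value):
        return False
    try:
        parsed = datetime.date.fromisoformat(value)  # rejects month 13, Feb 30, etc.
    except ValueError:
        return False
    today = today or datetime.date.today()
    return parsed >= today  # assumed rule: no bookings in the past
```

To use it, pass `validator=validate_booking_date` on the `date` slot. The `today` parameter mainly exists so the rule can be tested against a fixed date.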

```python
slots = [
    Slot(name="name", description="Customer's full name", prompt="What is your full name?"),
    Slot(
        name="email",
        description="Customer's email address",
        validator=validate_email,
        prompt="What is your email address?",
    ),
    Slot(
        name="date",
        description="Preferred appointment date (YYYY-MM-DD)",
        validator=validate_date,
        prompt="What date would you like to book? (Please use YYYY-MM-DD format)",
    ),
    Slot(
        name="notes",
        description="Any additional notes or requirements",
        required=False,
        prompt="Any special requirements? (optional)",
    ),
]

filler = SlotFiller(slots)

# Simulate a conversation (each turn makes one LLM call)
messages = [
    "I'd like to book an appointment",
    "John Smith",
    "john@example.com",
    "2024-03-15",
]

for msg in messages:
    print(f"User: {msg}")
    response = filler.process_message(msg)
    if response:
        print(f"Bot: {response}")

if filler.state.completed:
    print(f"Booking complete: {filler.get_filled_slots()}")
```

Conversation State Machine

```python
from dataclasses import dataclass
from enum import Enum, auto
from typing import Callable, Optional


class ConversationState(Enum):
    GREETING = auto()
    IDENTIFYING_NEED = auto()
    COLLECTING_INFO = auto()
    PROCESSING = auto()
    CONFIRMING = auto()
    COMPLETED = auto()
    ERROR = auto()


@dataclass
class Transition:
    from_state: ConversationState
    to_state: ConversationState
    condition: Callable[[dict], bool]
    action: Optional[Callable[[dict], Optional[str]]] = None  # may override the reply


class ConversationStateMachine:
    """Manage conversation flow with a state machine.

    On each turn the current state's handler produces a reply; then the first
    registered transition whose condition holds moves the machine on.
    """

    def __init__(self):
        self.state = ConversationState.GREETING
        self.context: dict = {}
        self.transitions: list[Transition] = []
        self.state_handlers: dict[ConversationState, Callable[[str, dict], str]] = {}

    def add_transition(self, transition: Transition):
        """Add a state transition."""
        self.transitions.append(transition)

    def set_handler(self, state: ConversationState, handler: Callable[[str, dict], str]):
        """Set the handler for a state."""
        self.state_handlers[state] = handler

    def process(self, user_input: str) -> str:
        """Process user input and return the response."""
        handler = self.state_handlers.get(self.state)
        if handler:
            response = handler(user_input, self.context)
        else:
            response = "I'm not sure how to help with that."

        # Follow at most one transition per turn
        for transition in self.transitions:
            if transition.from_state == self.state and transition.condition(self.context):
                self.state = transition.to_state
                if transition.action:
                    action_response = transition.action(self.context)
                    if action_response:
                        response = action_response
                break

        return response
```
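Transitions are plain data, so wiring mistakes can be caught before any user sees them. States such as `PROCESSING` or `ERROR` are easy to declare and then forget to wire up. The sketch below is minimal and uses a hypothetical `unreachable_states` helper. It runs a breadth-first search from the start state over `(from_state, to_state)` name pairs and reports every declared state that no path reaches.

```python
from collections import deque


def unreachable_states(
    states: list[str], transitions: list[tuple[str, str]], start: str
) -> set[str]:
    """Return declared states that no chain of transitions reaches from `start`."""
    graph: dict[str, list[str]] = {s: [] for s in states}
    for src, dst in transitions:
        graph.setdefault(src, []).append(dst)

    seen = {start}
    queue = deque([start])
    while queue:  # breadth-first search over the transition graph
        for nxt in graph.get(queue.popleft(), []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return set(states) - seen


states = ["GREETING", "IDENTIFYING_NEED", "COLLECTING_INFO", "PROCESSING",
          "CONFIRMING", "COMPLETED", "ERROR"]
wiring = [("GREETING", "IDENTIFYING_NEED"),
          ("IDENTIFYING_NEED", "COLLECTING_INFO"),
          ("COLLECTING_INFO", "CONFIRMING")]
print(sorted(unreachable_states(states, wiring, "GREETING")))
# ['COMPLETED', 'ERROR', 'PROCESSING']
```

For a configured machine `sm`, you could build the inputs as `[s.name for s in ConversationState]` and `[(t.from_state.name, t.to_state.name) for t in sm.transitions]`. The check then runs in a unit test alongside the handlers.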

```python
import re

# Build a customer support state machine.
# Reuses classify_intent/Intent and Slot/SlotFiller from the sections above.


def greeting_handler(user_input: str, context: dict) -> str:
    context["greeted"] = True
    return "Hello! How can I help you today? I can assist with orders, returns, or general questions."


def identifying_handler(user_input: str, context: dict) -> str:
    result = classify_intent(user_input)
    context["intent"] = result.intent.value
    context["entities"] = result.entities

    if result.intent == Intent.SUPPORT:
        return "I'd be happy to help with that. Could you describe the issue you're experiencing?"
    elif result.intent == Intent.PURCHASE:
        return "Great! What product are you interested in?"
    elif result.intent == Intent.COMPLAINT:
        return "I'm sorry to hear that. Let me help resolve this. What's your order number?"
    return "Could you tell me more about what you need help with?"


def slots_for_intent(intent: str) -> list[Slot]:
    """Illustrative slot sets matching the questions asked above.

    Returns fresh Slot objects on every call, because Slot instances hold state.
    """
    if intent == "complaint":
        return [Slot(name="order_number", description="Order number"),
                Slot(name="issue", description="Description of the issue")]
    if intent == "support":
        return [Slot(name="issue", description="Description of the technical issue")]
    if intent == "purchase":
        return [Slot(name="product", description="Product the customer wants to buy")]
    return [Slot(name="details", description="Details of the customer's request")]


def collecting_handler(user_input: str, context: dict) -> str:
    # Use slot filling for structured data collection
    if "slot_filler" not in context:
        context["slot_filler"] = SlotFiller(slots_for_intent(context.get("intent", "")))

    filler = context["slot_filler"]
    response = filler.process_message(user_input)
    if filler.state.completed:
        context["collected_data"] = filler.get_filled_slots()
        context["info_complete"] = True
        return "Thank you. Let me look into this for you."
    return response


def confirming_handler(user_input: str, context: dict) -> str:
    # Match whole words, so "know" or "nothing" doesn't count as "no"
    words = set(re.findall(r"[a-z]+", user_input.lower()))
    if words & {"yes", "confirm", "confirmed"}:
        context["confirmed"] = True
        return "Your request has been processed. Is there anything else I can help with?"
    if "no" in words:
        context["confirmed"] = False
        return "No problem. What would you like to change?"
    return "Please confirm: would you like me to proceed? (yes/no)"


# Set up the state machine
sm = ConversationStateMachine()
sm.set_handler(ConversationState.GREETING, greeting_handler)
sm.set_handler(ConversationState.IDENTIFYING_NEED, identifying_handler)
sm.set_handler(ConversationState.COLLECTING_INFO, collecting_handler)
sm.set_handler(ConversationState.CONFIRMING, confirming_handler)

sm.add_transition(Transition(
    from_state=ConversationState.GREETING,
    to_state=ConversationState.IDENTIFYING_NEED,
    condition=lambda ctx: ctx.get("greeted", False),
))
sm.add_transition(Transition(
    from_state=ConversationState.IDENTIFYING_NEED,
    to_state=ConversationState.COLLECTING_INFO,
    # Only move on for intents that have something to collect
    condition=lambda ctx: ctx.get("intent") in {"support", "purchase", "complaint"},
))
sm.add_transition(Transition(
    from_state=ConversationState.COLLECTING_INFO,
    to_state=ConversationState.CONFIRMING,
    condition=lambda ctx: ctx.get("info_complete", False),
    # confirming_handler expects a yes/no answer, so ask for one explicitly
    action=lambda ctx: f"Here's what I have: {ctx['collected_data']}. Shall I proceed? (yes/no)",
))
```

Context Window Management

```python
from dataclasses import dataclass
from typing import Optional

import tiktoken

# Reuses `client` from the intent classification example.


@dataclass
class Message:
    role: str
    content: str
    tokens: int = 0
    importance: float = 1.0  # Higher = more important to keep


class ContextManager:
    """Manage conversation context within a token budget."""

    def __init__(
        self,
        max_tokens: int = 4000,
        model: str = "gpt-4o-mini",
        system_prompt: Optional[str] = None,
    ):
        self.max_tokens = max_tokens
        try:
            self.encoder = tiktoken.encoding_for_model(model)
        except KeyError:
            # Unknown model name (e.g. an older tiktoken): approximate counts
            self.encoder = tiktoken.get_encoding("cl100k_base")
        self.messages: list[Message] = []
        self.system_prompt = system_prompt
        self.system_tokens = self.count_tokens(system_prompt) if system_prompt else 0

    def count_tokens(self, text: str) -> int:
        """Count content tokens (ignores the small per-message formatting overhead)."""
        return len(self.encoder.encode(text))

    def add_message(self, role: str, content: str, importance: float = 1.0):
        """Add a message to the context, trimming if over budget."""
        self.messages.append(Message(
            role=role,
            content=content,
            tokens=self.count_tokens(content),
            importance=importance,
        ))
        self._trim_context()

    def _trim_context(self):
        """Drop low-importance middle messages until the context fits."""
        available = self.max_tokens - self.system_tokens
        total = sum(m.tokens for m in self.messages)

        # Keep the first message (often important) and the latest one. Among the
        # rest, remove the lowest-importance message; min() picks the oldest on ties.
        while total > available and len(self.messages) > 2:
            idx = min(range(1, len(self.messages) - 1), key=lambda i: self.messages[i].importance)
            # Pop by index: list.remove() compares by value and could delete an
            # identical earlier message instead.
            total -= self.messages.pop(idx).tokens

    def get_messages(self) -> list[dict]:
        """Get messages formatted for the Chat Completions API."""
        result = []
        if self.system_prompt:
            result.append({"role": "system", "content": self.system_prompt})
        result.extend({"role": m.role, "content": m.content} for m in self.messages)
        return result

    def summarize_old_context(self, keep_last: int = 4):
        """Replace all but the last `keep_last` messages with an LLM-written summary."""
        if len(self.messages) < keep_last + 2:
            return

        old_messages = self.messages[:-keep_last]
        old_text = "\n".join(f"{m.role}: {m.content}" for m in old_messages)
        summary_prompt = f"Summarize this conversation history in 2-3 sentences:\n{old_text}"

        response = client.chat.completions.create(
            model="gpt-4o-mini",
            messages=[{"role": "user", "content": summary_prompt}],
            max_tokens=150,
        )
        summary = response.choices[0].message.content

        content = f"Previous conversation summary: {summary}"
        summary_msg = Message(
            role="system",
            content=content,
            tokens=self.count_tokens(content),  # count what is actually sent
            importance=0.8,
        )
        self.messages = [summary_msg] + self.messages[-keep_last:]
```
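`count_tokens` measures only message content. Each chat message also costs a few tokens of formatting overhead, so a context that sums to exactly `max_tokens` can still overflow. The sketch below applies the same "keep first and last, drop the lowest-importance middle message" policy as a pure function. It includes an assumed per-message overhead of 3 tokens (an approximation, not a documented constant) and a pluggable token counter. The pluggable counter also makes the policy easy to unit-test without tiktoken. `Turn`, `trim_to_budget`, and `word_count` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Callable

PER_MESSAGE_OVERHEAD = 3  # assumed formatting cost per chat message, in tokens


@dataclass
class Turn:  # hypothetical minimal message type for this sketch
    role: str
    content: str
    importance: float = 1.0


def trim_to_budget(turns: list[Turn], budget: int, count: Callable[[str], int]) -> list[Turn]:
    """Keep the first and last turns; drop lowest-importance middle turns, oldest first on ties."""

    def cost(turn: Turn) -> int:
        return count(turn.content) + PER_MESSAGE_OVERHEAD

    kept = list(turns)
    total = sum(cost(t) for t in kept)
    while total > budget and len(kept) > 2:
        # min() returns the first minimum, i.e. the oldest of equally unimportant turns
        idx = min(range(1, len(kept) - 1), key=lambda i: kept[i].importance)
        total -= cost(kept.pop(idx))
    return kept


def word_count(text: str) -> int:
    """Crude stand-in for a real tokenizer, good enough for tests."""
    return len(text.split())


history = [
    Turn("user", "Hi I need help with my order"),
    Turn("assistant", "Sure what is your order number"),
    Turn("user", "It is ORDER-12345", importance=1.5),
    Turn("assistant", "Thanks let me look that up"),
]
trimmed = trim_to_budget(history, budget=30, count=word_count)
print([t.content for t in trimmed])
# ['Hi I need help with my order', 'It is ORDER-12345', 'Thanks let me look that up']
```

In the example, the four turns cost 34 "tokens" against a budget of 30. The function drops the low-importance assistant question and keeps the order number because it carries `importance=1.5`. In `ContextManager`, the equivalent change would add the overhead to each `Message.tokens`.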

```python
# Usage
context = ContextManager(
    max_tokens=4000,
    system_prompt="You are a helpful customer support agent.",
)

# Add conversation turns
context.add_message("user", "Hi, I need help with my order")
context.add_message("assistant", "Hello! I'd be happy to help. What's your order number?")
context.add_message("user", "It's ORDER-12345", importance=1.5)  # Important info
context.add_message("assistant", "Thank you. Let me look that up for you.")

# Get messages for the API call
messages = context.get_messages()
```

Error Recovery and Fallbacks

```python
import json

import openai

# Reuses `client` from the intent classification example.


class ConversationRecovery:
    """Handle errors and recover gracefully."""

    def __init__(self, max_errors: int = 3, max_clarifications: int = 2):
        self.error_count = 0
        self.max_errors = max_errors
        self.clarification_attempts = 0
        self.max_clarifications = max_clarifications

    def handle_unclear_input(self, user_input: str, context: dict) -> str:
        """Ask a clarifying question, or list options after repeated confusion."""
        self.clarification_attempts += 1
        if self.clarification_attempts >= self.max_clarifications:
            self.clarification_attempts = 0
            return self._offer_alternatives(context)

        prompt = f"""The user's message is unclear. Generate a helpful clarifying question.

User message: {user_input}
Context: {json.dumps(context.get('recent_topics', []))}

Be specific about what you need clarified."""

        response = client.chat.completions.create(
            model="gpt-4o-mini",
            messages=[{"role": "user", "content": prompt}],
        )
        return response.choices[0].message.content

    def _offer_alternatives(self, context: dict) -> str:
        """Offer alternative ways to help."""
        return """I'm having trouble understanding. Here are some things I can help with:
1. Check order status
2. Process a return
3. Answer product questions
4. Connect you with a human agent

Which would you like?"""

    def handle_api_error(self, error: Exception) -> str:
        """Turn an API error into a user-facing message; escalate after repeated failures."""
        self.error_count += 1
        if self.error_count >= self.max_errors:
            return "I'm experiencing technical difficulties. Let me connect you with a human agent."

        # Match on the OpenAI SDK's exception types rather than on message text
        if isinstance(error, openai.RateLimitError):
            return "I'm a bit busy right now. Could you please wait a moment and try again?"
        if isinstance(error, (openai.APITimeoutError, TimeoutError)):
            return "That took longer than expected. Could you please try again?"
        return "I encountered a small issue. Could you please repeat that?"

    def handle_out_of_scope(self, user_input: str) -> str:
        """Handle requests outside the bot's capabilities."""
        prompt = f"""The user is asking about something outside our scope.
Politely explain what you can help with and redirect.

User message: {user_input}

You can help with: orders, returns, product questions, account issues."""

        response = client.chat.completions.create(
            model="gpt-4o-mini",
            messages=[{"role": "user", "content": prompt}],
        )
        return response.choices[0].message.content

    def detect_frustration(self, messages: list[dict]) -> bool:
        """Detect frustration with simple keyword heuristics on recent user turns."""
        if len(messages) < 2:
            return False

        recent = " ".join(m["content"] for m in messages[-3:] if m["role"] == "user").lower()
        frustration_indicators = [
            "frustrated", "angry", "useless", "terrible",
            "waste of time", "speak to human", "speak to a human", "real person",
            "!!!", "???", "this is ridiculous",
        ]
        return any(ind in recent for ind in frustration_indicators)

    def handle_frustration(self) -> str:
        """Handle a frustrated user."""
        return ("I understand this has been frustrating, and I apologize for the difficulty. "
                "Would you like me to connect you with a human agent who can help resolve this more quickly?")
```
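`handle_api_error` tells the user that something went wrong. Transient failures such as rate limits and timeouts are often worth retrying quietly first. The OpenAI Python client already retries some errors on its own, and you can configure that with `max_retries`. An explicit wrapper lets you control which failures are retried and how long to wait. The following is a minimal sketch of exponential backoff with jitter, built on a hypothetical `retry_with_backoff` helper. Which exception types count as transient is your call; `openai.RateLimitError` and `openai.APITimeoutError` are natural candidates. The `sleep` parameter exists so that tests can skip real waiting.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def retry_with_backoff(
    fn: Callable[[], T],
    retry_on: tuple[type[BaseException], ...],
    max_attempts: int = 3,
    base_delay: float = 0.5,
    max_delay: float = 8.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call fn(); on a retryable error, wait an exponentially growing, jittered delay and retry."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    attempt = 0
    while True:
        try:
            return fn()
        except retry_on:
            attempt += 1
            if attempt >= max_attempts:
                raise  # out of attempts: let the caller's fallback handle it
            delay = min(max_delay, base_delay * 2 ** (attempt - 1))
            # Randomize by +/-50% so many clients don't retry in lockstep
            sleep(delay * random.uniform(0.5, 1.5))


# Simulated flaky call: times out twice, then succeeds
calls = {"n": 0}


def flaky() -> str:
    calls["n"] += 1
    if calls["n"] < 3:
        raise TimeoutError("simulated timeout")
    return "ok"


waits: list[float] = []
print(retry_with_backoff(flaky, retry_on=(TimeoutError,), sleep=waits.append))  # ok
print(len(waits))  # 2
```

If the API call is wrapped this way inside the `try` block, `handle_api_error` only runs once the retries are exhausted. Its `error_count` then reflects real outages rather than single hiccups.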

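```python
# Usage
recovery = ConversationRecovery()

# Hypothetical history, for illustration only
conversation_history = [
    {"role": "user", "content": "Where is my order?"},
    {"role": "assistant", "content": "Could you share your order number?"},
    {"role": "user", "content": "I already told you!!! This is ridiculous"},
]
user_input = conversation_history[-1]["content"]


def process_user_input(text: str) -> str:
    """Stand-in for your real pipeline (intent routing, state machine, ...)."""
    return "Let me check that for you."


try:
    response = process_user_input(user_input)
except Exception as e:
    response = recovery.handle_api_error(e)

# Check for frustration
if recovery.detect_frustration(conversation_history):
    response = recovery.handle_frustration()

print(response)
```

Production Conversation Service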

```python
import uuid
from typing import Optional

from fastapi import FastAPI, HTTPException
from pydantic import BaseModel

# Builds on ContextManager, ConversationStateMachine (configured as `sm`),
# ConversationRecovery, and classify_intent from the sections above.

app = FastAPI()

# In-memory store: fine for a demo, but lost on restart and not shared
# between worker processes. Use Redis or a database in production.
conversations: dict[str, dict] = {}


class ChatRequest(BaseModel):
    conversation_id: Optional[str] = None
    message: str


class ChatResponse(BaseModel):
    conversation_id: str
    response: str
    intent: Optional[str] = None
    state: str


def new_state_machine() -> ConversationStateMachine:
    """Fresh per-conversation machine reusing the handlers and transitions configured on `sm`."""
    machine = ConversationStateMachine()
    machine.state_handlers = dict(sm.state_handlers)
    machine.transitions = list(sm.transitions)
    return machine


# A plain `def` endpoint: FastAPI runs it in a thread pool, so the blocking
# OpenAI calls don't stall the event loop as they would inside `async def`.
@app.post("/chat", response_model=ChatResponse)
def chat(request: ChatRequest):
    """Process a chat message."""
    # Get or create the conversation
    if request.conversation_id and request.conversation_id in conversations:
        conv = conversations[request.conversation_id]
    else:
        conv_id = str(uuid.uuid4())
        conv = {
            "id": conv_id,
            "context": ContextManager(
                max_tokens=4000,
                system_prompt="You are a helpful customer support agent for TechCorp.",
            ),
            "state_machine": new_state_machine(),
            "recovery": ConversationRecovery(),
        }
        conversations[conv_id] = conv

    context = conv["context"]
    machine = conv["state_machine"]
    recovery = conv["recovery"]

    # Classify against prior turns only, then record the new user message
    history = context.get_messages()
    context.add_message("user", request.message)

    try:
        intent_result = classify_intent(request.message, history)

        if recovery.detect_frustration(context.get_messages()):
            response = recovery.handle_frustration()
        else:
            response = machine.process(request.message)

        context.add_message("assistant", response)

        # Summarize once the context gets long
        if len(context.messages) > 10:
            context.summarize_old_context()

        return ChatResponse(
            conversation_id=conv["id"],
            response=response,
            intent=intent_result.intent.value,
            state=machine.state.name,
        )
    except Exception as e:
        response = recovery.handle_api_error(e)
        return ChatResponse(
            conversation_id=conv["id"],
            response=response,
            state=machine.state.name,
        )
```
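The `conversations` dict never forgets anything, so a long-running process grows without bound. One option is a small store with a time-to-live, sketched below. It assumes that abandoned conversations can simply be dropped after a period of inactivity. `ConversationStore`, its `ttl_seconds` parameter, and the injectable `clock` are hypothetical names. Once you run more than one worker, a shared backend such as Redis with key expiry is the usual choice.

```python
import time
from typing import Any, Callable, Optional


class ConversationStore:
    """In-memory conversation store that evicts entries idle longer than ttl_seconds."""

    def __init__(self, ttl_seconds: float = 1800.0, clock: Callable[[], float] = time.monotonic):
        self.ttl_seconds = ttl_seconds
        self.clock = clock
        self._items: dict[str, tuple[float, Any]] = {}  # id -> (last access time, conversation)

    def get(self, conv_id: str) -> Optional[Any]:
        """Return the conversation and refresh its timestamp, or None if missing or expired."""
        self._evict_expired()
        entry = self._items.get(conv_id)
        if entry is None:
            return None
        self._items[conv_id] = (self.clock(), entry[1])  # sliding expiration
        return entry[1]

    def put(self, conv_id: str, conv: Any) -> None:
        self._evict_expired()
        self._items[conv_id] = (self.clock(), conv)

    def __len__(self) -> int:
        return len(self._items)

    def _evict_expired(self) -> None:
        # O(n) scan per call: fine for small stores, replace with a heap if it grows
        now = self.clock()
        expired = [k for k, (ts, _) in self._items.items() if now - ts > self.ttl_seconds]
        for k in expired:
            del self._items[k]


now = [0.0]  # fake clock for the example
store = ConversationStore(ttl_seconds=60, clock=lambda: now[0])
store.put("a", {"id": "a"})
now[0] = 30.0
print(store.get("a"))  # {'id': 'a'}  (access refreshes the timestamp)
now[0] = 120.0
print(store.get("a"))  # None  (idle for 90 s, longer than the 60 s TTL)
```

Plain-`def` FastAPI endpoints run in a thread pool. If requests can arrive concurrently, guard the store (or the original dict) with a `threading.Lock`.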

```python
@app.get("/conversation/{conversation_id}")
async def get_conversation(conversation_id: str):
    """Get conversation history."""
    if conversation_id not in conversations:
        raise HTTPException(status_code=404, detail="Conversation not found")

    conv = conversations[conversation_id]
    return {
        "id": conversation_id,
        "messages": conv["context"].get_messages(),
        "state": conv["state_machine"].state.name,
    }
```

References

- Conversation Design: https://designguidelines.withgoogle.com/conversation/

- Rasa Documentation: https://rasa.com/docs/

- Dialogflow CX: https://cloud.google.com/dialogflow/cx/docs

- LangChain Memory: https://python.langchain.com/docs/modules/memory/

Conclusion

Effective conversation design transforms basic LLM interactions into polished user experiences. Use intent classification to understand what users want and route them appropriately. Implement slot filling for structured data collection without rigid forms. State machines provide predictable conversation flow while maintaining flexibility. Manage context windows carefully to maintain coherence in long conversations. Build robust error recovery to handle unclear inputs, API failures, and frustrated users gracefully. The best conversational AI feels natural and helpful, guiding users to their goals while handling edge cases smoothly.

Discover more from C4: Container, Code, Cloud & Context
