The Data Deluge at the Edge

After two decades of building data systems, I’ve watched the IoT revolution transform from a buzzword into the backbone of modern enterprise operations. The convergence of connected devices and real-time analytics has created opportunities that seemed impossible just a few years ago. But it has also introduced architectural challenges that require careful consideration of data flow, latency requirements, and processing strategies.

The fundamental challenge with IoT data isn’t volume alone—it’s the combination of velocity, variety, and the need for immediate action. A single manufacturing plant might generate terabytes of sensor data daily, but the real value lies in detecting anomalies within milliseconds, not hours. This reality has forced us to rethink traditional data architectures and embrace edge computing, stream processing, and hybrid analytics approaches.

Understanding the IoT Data Pipeline

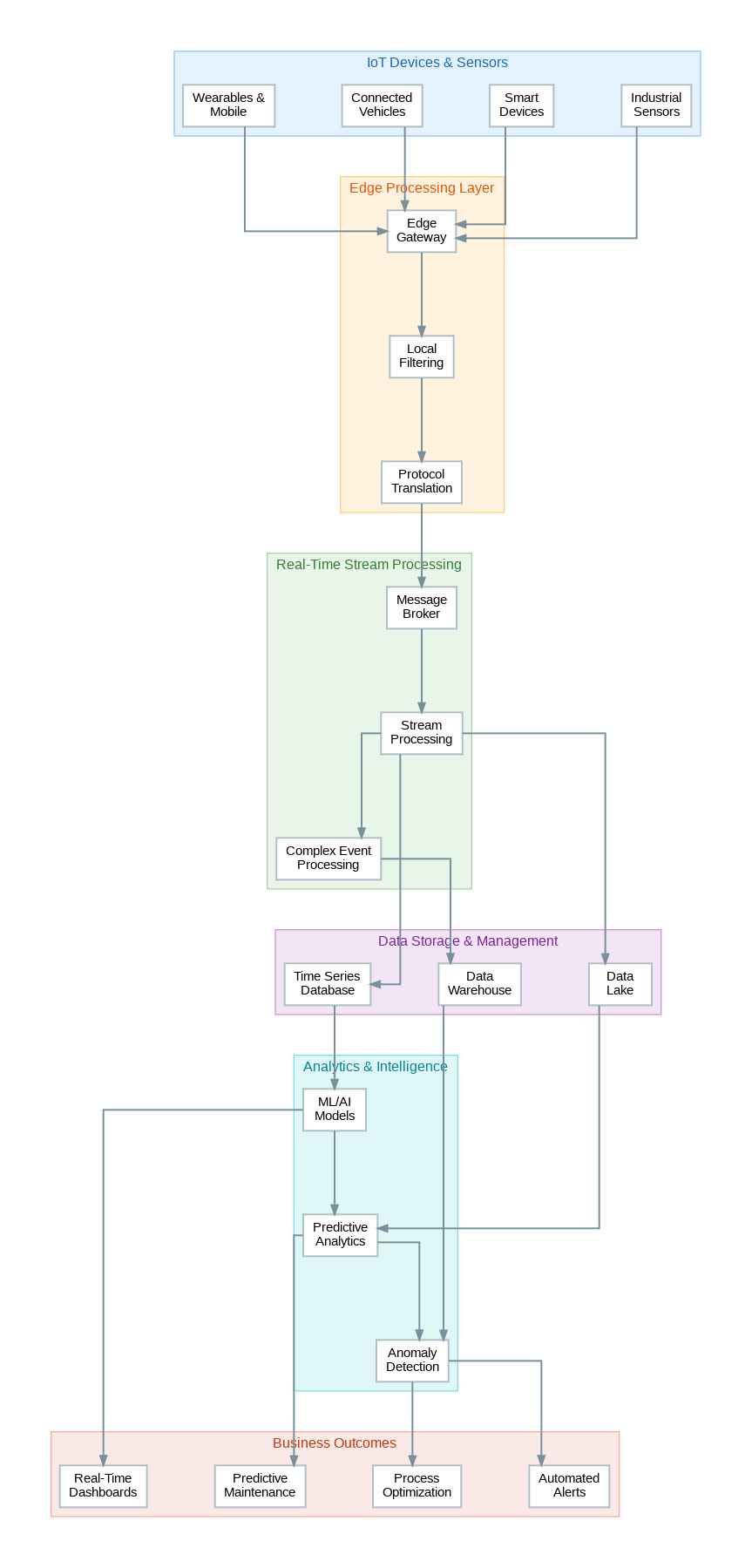

The modern IoT analytics architecture operates across multiple tiers, each serving a specific purpose in the data journey from sensor to insight. At the edge, devices generate raw telemetry—temperature readings, vibration patterns, pressure measurements, location coordinates. This data flows through edge gateways that perform initial filtering and protocol translation before reaching the cloud infrastructure.

Edge processing has become increasingly sophisticated. Rather than simply forwarding all data to the cloud, modern edge gateways run lightweight analytics models that can detect critical conditions locally. This approach reduces bandwidth costs, minimizes latency for time-sensitive decisions, and ensures continued operation even when cloud connectivity is interrupted. I’ve seen manufacturing clients reduce their cloud data transfer costs by 60-70% simply by implementing intelligent edge filtering.
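As a rough illustration of intelligent edge filtering, here is a minimal Python sketch of a deadband filter. It forwards a reading only when it has moved meaningfully since the last forwarded value, or when it crosses a critical limit. The `Reading` type, the `deadband_filter` function, and the threshold parameters are hypothetical names chosen for this example, not part of any particular gateway SDK.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, Iterator


@dataclass
class Reading:
    sensor_id: str
    timestamp: float  # seconds since epoch (assumed unit)
    value: float


def deadband_filter(
    readings: Iterable[Reading],
    deadband: float,
    critical_high: float,
) -> Iterator[Reading]:
    """Yield only readings worth sending upstream.

    A reading is forwarded when it is the first one seen for its sensor,
    when it differs from the last forwarded value by more than `deadband`,
    or when it is at or above `critical_high`.
    """
    last_sent: Dict[str, float] = {}
    for reading in readings:
        previous = last_sent.get(reading.sensor_id)
        is_critical = reading.value >= critical_high
        has_changed = previous is None or abs(reading.value - previous) > deadband
        if is_critical or has_changed:
            last_sent[reading.sensor_id] = reading.value
            yield reading
```

How much bandwidth a filter like this saves depends entirely on the signal and the chosen deadband, so the thresholds would need tuning per sensor type.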

Stream Processing: The Heart of Real-Time Analytics

Once data reaches the cloud, stream processing engines such as Apache Flink or Kafka Streams (typically fed by Apache Kafka as the event backbone), or cloud-native services like Azure Stream Analytics and Amazon Kinesis, take over. These systems can process millions of events per second, applying complex event processing (CEP) rules to detect patterns that span multiple data streams. The key insight here is that stream processing isn’t just about speed: it’s about maintaining state across time windows while handling out-of-order events and late-arriving data.
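To make the idea of windowed state with out-of-order and late data concrete, here is a small, engine-agnostic Python sketch. It is not the API of Flink, Kafka Streams, or any cloud service. `TumblingWindowAverager`, `allowed_lateness`, and the integer event times are assumptions made for illustration. The watermark trails the highest event time seen by `allowed_lateness`, and a window is emitted once the watermark passes its end.

```python
from collections import defaultdict
from typing import List, Tuple


class TumblingWindowAverager:
    """Average values per fixed-size event-time window."""

    def __init__(self, window_size: int, allowed_lateness: int):
        self.window_size = window_size
        self.allowed_lateness = allowed_lateness
        self.watermark = float("-inf")          # max event time seen minus lateness
        self.open_windows = defaultdict(list)   # window start -> buffered values
        self.dropped_late = 0                   # events that arrived after their window closed

    def add(self, event_time: int, value: float) -> List[Tuple[int, float]]:
        """Ingest one event and return (window_start, average) for windows that closed."""
        window_start = (event_time // self.window_size) * self.window_size
        if window_start + self.window_size <= self.watermark:
            # The window this event belongs to has already been emitted.
            self.dropped_late += 1
            return []

        self.open_windows[window_start].append(value)
        self.watermark = max(self.watermark, event_time - self.allowed_lateness)

        closed = []
        for start in sorted(self.open_windows):
            if start + self.window_size <= self.watermark:
                values = self.open_windows.pop(start)
                closed.append((start, sum(values) / len(values)))
        return closed
```

With `window_size=10` and `allowed_lateness=5`, an event stamped 8 that arrives after an event stamped 12 is still counted in the first window, while one stamped 3 that arrives after the watermark has passed 10 is dropped and counted in `dropped_late`. Real engines usually offer more options for late data, such as side outputs or window updates.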

In production systems, I’ve found that the balance between hot path and cold path analytics depends heavily on the business use case. Hot path analytics processes data in real time for immediate action: triggering alerts, adjusting control systems, or updating dashboards. Cold path analytics stores data for batch processing, enabling historical analysis, model training, and trend identification. Most successful IoT implementations require both paths working in harmony.
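The split between the two paths can be sketched in a few lines of Python. This is a simplified illustration under assumed names: `route_event`, `on_alert`, and `cold_buffer` are hypothetical, and in practice the cold path would write to durable storage rather than an in-memory list.

```python
from typing import Callable, Dict, List


def route_event(
    event: Dict[str, float],
    alert_threshold: float,
    on_alert: Callable[[Dict[str, float]], None],
    cold_buffer: List[Dict[str, float]],
) -> None:
    """Send every event down the cold path, and urgent ones down the hot path too."""
    # Cold path: keep the full record for later batch analysis and model training.
    cold_buffer.append(event)

    # Hot path: act immediately when the value crosses the alert threshold.
    if event["value"] >= alert_threshold:
        on_alert(event)
```

The point of the design is that the hot path never replaces the cold path: alerts are raised from the same events that are retained for historical analysis.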

Production Lessons from Industrial IoT

Working with manufacturing clients has taught me several hard-won lessons about IoT analytics at scale. First, data quality at the edge is paramount. Sensor drift, calibration issues, and intermittent connectivity can corrupt analytics results if not handled properly. Implementing data validation and anomaly detection at the edge gateway level prevents garbage data from polluting downstream systems.
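A gateway-level validation pass might look something like the following Python sketch. It labels each reading as missing, out of the sensor's physical range, or suspiciously flat, where a long run of identical values can indicate a stuck sensor. The function name `label_readings`, the label strings, and the `max_flat_run` parameter are assumptions for this example. It does not detect slow calibration drift, which generally needs a reference sensor or a model.

```python
from typing import List, Optional, Sequence


def label_readings(
    values: Sequence[Optional[float]],
    valid_min: float,
    valid_max: float,
    max_flat_run: int,
) -> List[str]:
    """Return one label per reading: 'ok', 'missing', 'out_of_range', or 'stuck'."""
    labels: List[str] = []
    flat_run = 0       # length of the current run of identical valid values
    previous = None    # last valid value, reset after a gap or invalid reading

    for value in values:
        if value is None:
            labels.append("missing")
            flat_run, previous = 0, None
            continue
        if not (valid_min <= value <= valid_max):
            labels.append("out_of_range")
            flat_run, previous = 0, None
            continue

        flat_run = flat_run + 1 if value == previous else 1
        previous = value
        labels.append("stuck" if flat_run > max_flat_run else "ok")

    return labels
```

Downstream consumers can then choose to drop, quarantine, or interpolate anything not labeled `ok`, rather than discovering bad data after it has skewed an aggregate.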

Second, time synchronization across distributed devices is more challenging than most teams anticipate. When correlating events from thousands of sensors, even millisecond-level clock drift can lead to incorrect conclusions. Network Time Protocol (NTP) synchronization and careful handling of timestamps in stream processing are essential for accurate analytics.
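For intuition about what NTP-style synchronization computes, the standard four-timestamp exchange estimates a device's clock offset and the round-trip delay as follows. The function and parameter names in this Python sketch are illustrative, and it assumes the network delay is roughly symmetric, which is the same assumption the offset formula itself relies on.

```python
from typing import Tuple


def ntp_offset_and_delay(
    client_send: float,
    server_recv: float,
    server_send: float,
    client_recv: float,
) -> Tuple[float, float]:
    """Estimate clock offset and round-trip delay from one request/response exchange.

    client_send and client_recv are read from the device clock;
    server_recv and server_send are read from the reference clock.
    A positive offset means the device clock is behind the reference.
    """
    offset = ((server_recv - client_send) + (server_send - client_recv)) / 2
    delay = (client_recv - client_send) - (server_send - server_recv)
    return offset, delay


def correct_timestamp(device_timestamp: float, offset: float) -> float:
    """Shift a device timestamp onto the reference clock using the estimated offset."""
    return device_timestamp + offset
```

For example, with timestamps in milliseconds, `ntp_offset_and_delay(100000, 100600, 100700, 100300)` gives an offset of 500 ms and a round-trip delay of 200 ms. In practice you would rely on an NTP client rather than hand-rolling this, but the arithmetic shows why asymmetric network paths limit the achievable accuracy.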

Third, the storage strategy must balance cost, query performance, and retention requirements. Time-series databases like InfluxDB or TimescaleDB excel at storing and querying IoT telemetry, but they need to be complemented by data lakes for long-term storage and machine learning workloads. The tiered storage approach—hot storage for recent data, warm storage for intermediate periods, and cold storage for archives—has become the standard pattern.
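The tiering rule can be expressed as a simple age-based policy. In this Python sketch the 7-day and 90-day boundaries are placeholder assumptions, not recommendations. Real retention windows depend on query patterns, compliance requirements, and storage pricing.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical retention boundaries; tune these per workload.
HOT_RETENTION = timedelta(days=7)
WARM_RETENTION = timedelta(days=90)


def storage_tier(record_time: datetime, now: datetime) -> str:
    """Return 'hot', 'warm', or 'cold' based on the age of a record."""
    age = now - record_time
    if age <= HOT_RETENTION:
        return "hot"
    if age <= WARM_RETENTION:
        return "warm"
    return "cold"
```

A scheduled job could apply a rule like this to decide which partitions to move from the time-series database to cheaper object storage.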

Predictive Maintenance: Where Analytics Delivers ROI

The most compelling IoT analytics use case I’ve encountered is predictive maintenance. By analyzing vibration patterns, temperature trends, and operational parameters, machine learning models can predict equipment failures days or weeks before they occur. One manufacturing client reduced unplanned downtime by 40% and maintenance costs by 25% within the first year of implementing predictive maintenance analytics.
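Production models for this are typically far richer, but the core idea of extrapolating a degradation trend can be sketched with a plain least-squares line. The Python function below is a deliberately simple stand-in for an ML model, not the approach used in the client example above. `estimate_hours_to_threshold` and its parameters are hypothetical, and the sketch assumes vibration RMS grows roughly linearly as a component wears.

```python
from typing import Optional, Sequence


def estimate_hours_to_threshold(
    hours: Sequence[float],
    vibration_rms: Sequence[float],
    failure_threshold: float,
) -> Optional[float]:
    """Fit a straight line to vibration RMS over time and extrapolate to a threshold.

    Returns the estimated hours remaining after the last sample, or None when
    there is no upward trend (or too little data) to extrapolate from.
    """
    n = len(hours)
    if n < 2 or n != len(vibration_rms):
        return None

    mean_x = sum(hours) / n
    mean_y = sum(vibration_rms) / n
    sxx = sum((x - mean_x) ** 2 for x in hours)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(hours, vibration_rms))
    if sxx == 0:
        return None  # all samples share one timestamp

    slope = sxy / sxx
    if slope <= 0:
        return None  # flat or improving trend: nothing to predict

    intercept = mean_y - slope * mean_x
    crossing_hour = (failure_threshold - intercept) / slope
    return max(0.0, crossing_hour - hours[-1])
```

An estimate like this is only useful if the threshold reflects real failure data for that equipment class, which is where historical cold path data earns its keep.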

The key to successful predictive maintenance isn’t just the ML model—it’s the integration with operational workflows. Predictions must flow into work order systems, spare parts inventory management, and maintenance scheduling tools. The analytics system becomes part of a larger operational ecosystem rather than a standalone dashboard.

Security and Privacy Considerations

IoT security deserves special attention because the attack surface is enormous. Every connected device is a potential entry point for malicious actors. Defense in depth—encrypting data in transit and at rest, implementing device authentication, segmenting networks, and monitoring for anomalous behavior—is essential. The analytics system itself can contribute to security by detecting unusual patterns that might indicate compromised devices or data exfiltration attempts.
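One building block of device authentication is having each device sign its payloads with a per-device secret, so the ingestion layer can reject tampered or spoofed messages. The Python sketch below uses the standard library's `hmac` and `hashlib` modules. The function names and the idea of a pre-provisioned `device_key` are assumptions for illustration. It covers message authenticity and integrity only, so transport encryption such as TLS is still needed for confidentiality.

```python
import hashlib
import hmac


def sign_payload(device_key: bytes, payload: bytes) -> str:
    """Return a hex HMAC-SHA256 signature of the payload using the device's secret key."""
    return hmac.new(device_key, payload, hashlib.sha256).hexdigest()


def verify_payload(device_key: bytes, payload: bytes, signature: str) -> bool:
    """Check a received signature in constant time to avoid timing side channels."""
    expected = sign_payload(device_key, payload)
    return hmac.compare_digest(expected, signature)
```

A gateway would look up the key for the claimed device ID, call `verify_payload`, and discard anything that fails. Repeated failures from one device are themselves a useful signal for the anomaly monitoring described above.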

Looking Forward

The intersection of IoT and data analytics continues to evolve rapidly. Edge AI is enabling more sophisticated analytics closer to the data source. Digital twins—virtual representations of physical assets—are becoming standard for complex systems. And the integration of IoT data with enterprise systems is creating new opportunities for optimization across entire value chains.

For organizations beginning their IoT analytics journey, my advice is to start with a focused use case that delivers clear business value, build the foundational data infrastructure properly, and plan for scale from the beginning. The technology is mature enough for production deployment, but success requires careful attention to architecture, data quality, and operational integration.
