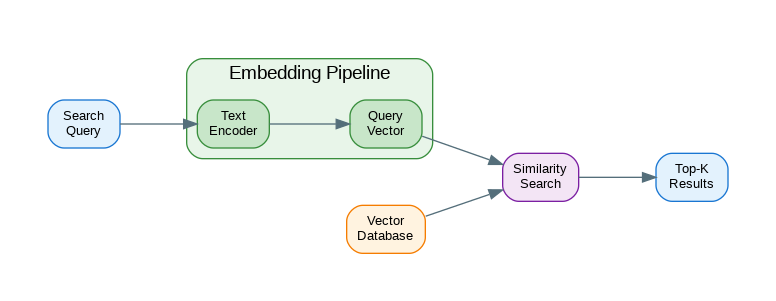

Introduction: Semantic search using embeddings has transformed how we find information. Unlike keyword search, embeddings capture meaning—finding documents about “machine learning” when you search for “AI training.” This guide covers building production embedding search systems: choosing embedding models, computing and storing vectors efficiently, implementing similarity search with various distance metrics, and optimizing for speed and accuracy. Whether you’re building a document search engine, recommendation system, or RAG pipeline, these patterns form the foundation.

Basic Embedding Search

import numpy as np
from typing import Optional

from openai import OpenAI

# The client reads the API key from the OPENAI_API_KEY environment variable
client = OpenAI()


def get_embedding(text: str, model: str = "text-embedding-3-small") -> list[float]:
    """Get embedding for a single text."""
    response = client.embeddings.create(
        model=model,
        input=text
    )
    return response.data[0].embedding


def get_embeddings_batch(texts: list[str], model: str = "text-embedding-3-small") -> list[list[float]]:
    """Get embeddings for multiple texts in a single API call."""
    response = client.embeddings.create(
        model=model,
        input=texts
    )
    # Sort by index to maintain input order
    return [item.embedding for item in sorted(response.data, key=lambda x: x.index)]


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Compute cosine similarity between two vectors."""
    a = np.array(a)
    b = np.array(b)
    return float(np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b)))


class SimpleEmbeddingSearch:
    """Basic in-memory embedding search."""

    def __init__(self, model: str = "text-embedding-3-small"):
        self.model = model
        self.documents: list[str] = []
        self.embeddings: list[list[float]] = []
        self.metadata: list[dict] = []

    def add_documents(self, documents: list[str], metadata: Optional[list[dict]] = None):
        """Add documents to the index."""
        embeddings = get_embeddings_batch(documents, self.model)
        self.documents.extend(documents)
        self.embeddings.extend(embeddings)
        # Default to one empty metadata dict per document
        self.metadata.extend(metadata or [{} for _ in documents])

    def search(self, query: str, top_k: int = 5) -> list[dict]:
        """Search for similar documents."""
        query_embedding = get_embedding(query, self.model)

        # Compute similarity against every stored embedding (linear scan)
        similarities = [
            cosine_similarity(query_embedding, doc_emb)
            for doc_emb in self.embeddings
        ]

        # Get top-k indices, highest score first
        top_indices = np.argsort(similarities)[-top_k:][::-1]

        results = []
        for idx in top_indices:
            results.append({
                "document": self.documents[idx],
                "score": similarities[idx],
                "metadata": self.metadata[idx]
            })
        return results


# Usage
search = SimpleEmbeddingSearch()

documents = [
    "Python is a programming language known for its simplicity.",
    "Machine learning models learn patterns from data.",
    "Docker containers package applications with dependencies.",
    "Kubernetes orchestrates container deployments at scale.",
    "Neural networks are inspired by biological neurons."
]

search.add_documents(documents)

results = search.search("How do AI systems learn?", top_k=3)
for r in results:
    print(f"{r['score']:.3f}: {r['document'][:50]}...")

Efficient Vector Storage with NumPy
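
A design point worth seeing in isolation before the store code: cosine similarity between unit-length vectors is simply their dot product, so rows can be normalized once when they are added rather than on every query. The following is a minimal sketch of that idea with toy 2-dimensional vectors. It is not part of the store in this section, and `normalize_rows` and `top_k_cosine` are hypothetical helper names.

```python
import numpy as np


def normalize_rows(matrix: np.ndarray) -> np.ndarray:
    """Scale each row to unit L2 norm (all-zero rows are left as zeros)."""
    norms = np.linalg.norm(matrix, axis=1, keepdims=True)
    norms[norms == 0] = 1.0  # avoid division by zero
    return matrix / norms


def top_k_cosine(unit_vectors: np.ndarray, query: np.ndarray, top_k: int) -> list[tuple[int, float]]:
    """Return (row index, cosine score) pairs, best match first."""
    query = query / np.linalg.norm(query)
    scores = unit_vectors @ query  # dot product equals cosine for unit vectors
    top_k = min(top_k, len(scores))
    order = np.argsort(scores)[::-1][:top_k]
    return [(int(i), float(scores[i])) for i in order]


vectors = np.array([[1.0, 0.0], [0.0, 2.0], [3.0, 3.0]], dtype=np.float32)
unit_vectors = normalize_rows(vectors)  # done once, at insert time
hits = top_k_cosine(unit_vectors, np.array([1.0, 1.0], dtype=np.float32), top_k=2)
print(hits)
```

The row pointing in the same direction as the query (index 2) comes back first with a score of about 1.0, regardless of its original length.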

import json
from pathlib import Path
from typing import Optional

import numpy as np


class NumpyVectorStore:
    """Efficient vector storage using NumPy arrays."""

    def __init__(self, dimension: int = 1536):
        self.dimension = dimension
        self.vectors: np.ndarray = np.empty((0, dimension), dtype=np.float32)
        self.documents: list[str] = []
        self.metadata: list[dict] = []

    def add(self, vectors: list[list[float]], documents: list[str], metadata: Optional[list[dict]] = None):
        """Add vectors to the store."""
        new_vectors = np.array(vectors, dtype=np.float32)
        self.vectors = np.vstack([self.vectors, new_vectors])
        self.documents.extend(documents)
        self.metadata.extend(metadata or [{} for _ in documents])

    def search(self, query_vector: list[float], top_k: int = 10) -> list[dict]:
        """Fast similarity search using matrix operations."""
        if len(self.documents) == 0:
            return []
        # argpartition raises if top_k exceeds the number of stored vectors
        top_k = min(top_k, len(self.documents))

        query = np.array(query_vector, dtype=np.float32)

        # Normalize for cosine similarity
        query_norm = query / np.linalg.norm(query)
        vectors_norm = self.vectors / np.linalg.norm(self.vectors, axis=1, keepdims=True)

        # Compute all similarities at once
        similarities = np.dot(vectors_norm, query_norm)

        # Get top-k: partition first (linear time), then sort only those k
        top_indices = np.argpartition(similarities, -top_k)[-top_k:]
        top_indices = top_indices[np.argsort(similarities[top_indices])[::-1]]

        return [
            {
                "document": self.documents[i],
                "score": float(similarities[i]),
                "metadata": self.metadata[i]
            }
            for i in top_indices
        ]

    def save(self, path: Path):
        """Save to disk."""
        path.mkdir(parents=True, exist_ok=True)
        np.save(path / "vectors.npy", self.vectors)
        with open(path / "documents.json", "w") as f:
            json.dump({
                "documents": self.documents,
                "metadata": self.metadata
            }, f)

    @classmethod
    def load(cls, path: Path) -> "NumpyVectorStore":
        """Load from disk."""
        vectors = np.load(path / "vectors.npy")
        with open(path / "documents.json") as f:
            data = json.load(f)

        store = cls(dimension=vectors.shape[1])
        store.vectors = vectors
        store.documents = data["documents"]
        store.metadata = data["metadata"]
        return store


def chunks(items: list, size: int):
    """Yield successive slices of `items` with at most `size` elements."""
    for i in range(0, len(items), size):
        yield items[i:i + size]


# Usage: exact brute-force search, practical while the matrix fits in memory
# (1M vectors x 1536 float32 dimensions is about 6 GB).
# `documents`, get_embedding and get_embeddings_batch come from the previous section.
store = NumpyVectorStore(dimension=1536)

# Add in batches
for batch in chunks(documents, 100):
    embeddings = get_embeddings_batch(batch)
    store.add(embeddings, batch)

# Save for later
store.save(Path("./vector_store"))

# Load and search
store = NumpyVectorStore.load(Path("./vector_store"))
query_embedding = get_embedding("How do AI systems learn?")
results = store.search(query_embedding, top_k=10)

FAISS for Large-Scale Search
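
FAISS indexes rank by either inner product or L2 distance. For unit-length vectors the two agree on ordering, because the squared L2 distance equals 2 - 2 * cosine. The short check below illustrates that identity with random data in plain NumPy; it is a sketch that does not depend on FAISS, and the array sizes are arbitrary.

```python
import numpy as np

rng = np.random.default_rng(0)
docs = rng.normal(size=(5, 8))
query = rng.normal(size=8)

# Normalize everything to unit length
docs /= np.linalg.norm(docs, axis=1, keepdims=True)
query /= np.linalg.norm(query)

cosine = docs @ query
squared_l2 = np.sum((docs - query) ** 2, axis=1)

# Identity for unit vectors: ||a - b||^2 = 2 - 2 * cos(a, b)
identity_holds = np.allclose(squared_l2, 2 - 2 * cosine)

# Highest cosine first and smallest distance first give the same ranking
same_ranking = np.array_equal(np.argsort(-cosine), np.argsort(squared_l2))
print(identity_holds, same_ranking)
```

The practical consequence is about score interpretation: an inner-product index returns similarities where higher is better, while an L2 index returns distances where lower is better.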

import json
from typing import Optional

import faiss
import numpy as np


class FAISSVectorStore:
    """High-performance vector search with FAISS."""

    def __init__(self, dimension: int = 1536, index_type: str = "flat"):
        self.dimension = dimension
        self.documents: list[str] = []
        self.metadata: list[dict] = []

        # Every index uses inner product, which equals cosine similarity
        # because vectors are L2-normalized before being added or queried.
        if index_type == "flat":
            # Exact search - best accuracy, slower for large datasets
            self.index = faiss.IndexFlatIP(dimension)
        elif index_type == "ivf":
            # Approximate search - faster, slightly less accurate.
            # Uses 100 clusters, so the first add() must supply at least
            # that many vectors to train on.
            quantizer = faiss.IndexFlatIP(dimension)
            self.index = faiss.IndexIVFFlat(quantizer, dimension, 100, faiss.METRIC_INNER_PRODUCT)
        elif index_type == "hnsw":
            # Graph-based - very fast, good accuracy.
            # Without an explicit metric, FAISS would default to L2 distance here.
            self.index = faiss.IndexHNSWFlat(dimension, 32, faiss.METRIC_INNER_PRODUCT)
        else:
            raise ValueError(f"Unknown index type: {index_type}")

        self.needs_training = index_type == "ivf"

    def add(self, vectors: list[list[float]], documents: list[str], metadata: Optional[list[dict]] = None):
        """Add vectors to the index."""
        vectors_np = np.array(vectors, dtype=np.float32)

        # Normalize in place so inner product equals cosine similarity
        faiss.normalize_L2(vectors_np)

        # Train if needed (IVF index)
        if self.needs_training and not self.index.is_trained:
            self.index.train(vectors_np)

        self.index.add(vectors_np)
        self.documents.extend(documents)
        self.metadata.extend(metadata or [{} for _ in documents])

    def search(self, query_vector: list[float], top_k: int = 10) -> list[dict]:
        """Search for similar vectors."""
        query = np.array([query_vector], dtype=np.float32)
        faiss.normalize_L2(query)

        scores, indices = self.index.search(query, top_k)

        results = []
        for score, idx in zip(scores[0], indices[0]):
            if idx >= 0:  # -1 means no result
                results.append({
                    "document": self.documents[idx],
                    "score": float(score),
                    "metadata": self.metadata[idx]
                })
        return results

    def save(self, path: str):
        """Save index to disk."""
        faiss.write_index(self.index, f"{path}.index")
        with open(f"{path}.json", "w") as f:
            json.dump({
                "documents": self.documents,
                "metadata": self.metadata,
                "dimension": self.dimension
            }, f)

    @classmethod
    def load(cls, path: str) -> "FAISSVectorStore":
        """Load index from disk."""
        with open(f"{path}.json") as f:
            data = json.load(f)

        store = cls(dimension=data["dimension"])
        # Replace the placeholder flat index with the saved (already trained) one
        store.index = faiss.read_index(f"{path}.index")
        store.documents = data["documents"]
        store.metadata = data["metadata"]
        store.needs_training = False
        return store


# Usage: HNSW suits collections in the millions of vectors
store = FAISSVectorStore(dimension=1536, index_type="hnsw")

# Add documents
embeddings = get_embeddings_batch(documents)
store.add(embeddings, documents)

# Search
query_emb = get_embedding("machine learning algorithms")
results = store.search(query_emb, top_k=5)

Hybrid Search: Combining Semantic and Keyword
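
Cosine similarities and BM25 scores live on different scales, so they need rescaling before a weighted sum is meaningful. The toy example below shows min-max scaling followed by weighted fusion on made-up scores. The numbers and the `min_max` and `fuse` names are illustrative only.

```python
import numpy as np


def min_max(scores: np.ndarray) -> np.ndarray:
    """Rescale scores to the 0-1 range; constant input maps to all zeros."""
    spread = scores.max() - scores.min()
    if spread == 0:
        return np.zeros_like(scores, dtype=float)
    return (scores - scores.min()) / spread


def fuse(semantic: np.ndarray, keyword: np.ndarray, semantic_weight: float = 0.7) -> np.ndarray:
    """Weighted sum of min-max scaled semantic and keyword scores."""
    return semantic_weight * min_max(semantic) + (1 - semantic_weight) * min_max(keyword)


semantic = np.array([0.82, 0.80, 0.45])  # cosine similarities (made up)
keyword = np.array([0.0, 7.5, 2.5])      # BM25 scores (made up)

combined = fuse(semantic, keyword, semantic_weight=0.6)
ranking = np.argsort(combined)[::-1]     # best document first
print(combined.round(3), ranking)
```

Document 0 has the highest semantic score, but document 1 ranks first after fusion because its strong keyword match outweighs a small semantic gap.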

from typing import Optional

import numpy as np
from rank_bm25 import BM25Okapi


class HybridSearch:
    """Combine semantic and keyword search."""

    def __init__(self, semantic_weight: float = 0.7):
        self.semantic_weight = semantic_weight
        self.keyword_weight = 1 - semantic_weight
        self.documents: list[str] = []
        self.embeddings: list[list[float]] = []
        self.bm25: Optional[BM25Okapi] = None

    def add_documents(self, documents: list[str]):
        """Index documents for both search types (replaces any existing index)."""
        self.documents = documents

        # Semantic embeddings
        self.embeddings = get_embeddings_batch(documents)

        # BM25 keyword index (simple lowercase whitespace tokenization)
        tokenized = [doc.lower().split() for doc in documents]
        self.bm25 = BM25Okapi(tokenized)

    def search(self, query: str, top_k: int = 10) -> list[dict]:
        """Hybrid search combining both methods."""
        # Semantic search
        query_embedding = get_embedding(query)
        semantic_scores = np.array([
            cosine_similarity(query_embedding, emb)
            for emb in self.embeddings
        ])

        # Min-max normalize to 0-1 (the epsilon avoids division by zero)
        semantic_scores = (semantic_scores - semantic_scores.min()) / (semantic_scores.max() - semantic_scores.min() + 1e-8)

        # Keyword search, tokenized the same way as the documents
        keyword_scores = np.array(self.bm25.get_scores(query.lower().split()))
        keyword_scores = (keyword_scores - keyword_scores.min()) / (keyword_scores.max() - keyword_scores.min() + 1e-8)

        # Combine scores
        combined_scores = (
            self.semantic_weight * semantic_scores +
            self.keyword_weight * keyword_scores
        )

        # Get top-k, highest combined score first
        top_indices = np.argsort(combined_scores)[-top_k:][::-1]

        return [
            {
                "document": self.documents[i],
                "score": float(combined_scores[i]),
                "semantic_score": float(semantic_scores[i]),
                "keyword_score": float(keyword_scores[i])
            }
            for i in top_indices
        ]


# Usage
hybrid = HybridSearch(semantic_weight=0.6)
hybrid.add_documents(documents)

# Semantic query benefits from embeddings
results = hybrid.search("AI training methods")

# Exact term query benefits from BM25
results = hybrid.search("Kubernetes container orchestration")

Production Search Service
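
The service in this section filters on metadata after the vector search, which means a strict filter can leave fewer than `top_k` results even with over-fetching. A minimal, framework-free sketch of that post-filtering step makes the behaviour easy to see in isolation. The `filter_results` helper and the sample candidates are hypothetical.

```python
def filter_results(results: list[dict], filters: dict, top_k: int) -> list[dict]:
    """Keep results whose metadata matches every key/value in `filters`."""
    matched = [
        r for r in results
        if all(r["metadata"].get(key) == value for key, value in filters.items())
    ]
    return matched[:top_k]


# Pretend these came back from an over-fetched vector search (top_k * 2)
candidates = [
    {"document": "a", "score": 0.91, "metadata": {"lang": "en"}},
    {"document": "b", "score": 0.88, "metadata": {"lang": "de"}},
    {"document": "c", "score": 0.80, "metadata": {"lang": "en"}},
    {"document": "d", "score": 0.75, "metadata": {}},
]

english = filter_results(candidates, {"lang": "en"}, top_k=2)
german = filter_results(candidates, {"lang": "de"}, top_k=2)
print([r["document"] for r in english], [r["document"] for r in german])
```

The English filter fills both slots, while the German filter returns a single result. If short result lists are a problem, one option is to fetch more candidates when a filter is present.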

import asyncio
import time
from functools import lru_cache
from typing import Optional

from fastapi import FastAPI, HTTPException
from pydantic import BaseModel

app = FastAPI()


class SearchRequest(BaseModel):
    query: str
    top_k: int = 10
    filter: Optional[dict] = None


class SearchResult(BaseModel):
    document: str
    score: float
    metadata: dict


class SearchResponse(BaseModel):
    results: list[SearchResult]
    query_time_ms: float


class IndexRequest(BaseModel):
    documents: list[str]
    metadata: Optional[list[dict]] = None


# Singleton store, loaded lazily on first use
_store: Optional[FAISSVectorStore] = None


def get_store() -> FAISSVectorStore:
    global _store
    if _store is None:
        _store = FAISSVectorStore.load("./production_index")
    return _store


# Cache embeddings for repeated queries (tuples are hashable and immutable)
@lru_cache(maxsize=1000)
def cached_embedding(query: str) -> tuple:
    return tuple(get_embedding(query))


@app.post("/search", response_model=SearchResponse)
async def search(request: SearchRequest):
    start = time.perf_counter()
    store = get_store()

    # Get query embedding (cached); the OpenAI call is blocking,
    # so run it in a worker thread to keep the event loop free
    query_embedding = list(await asyncio.to_thread(cached_embedding, request.query))

    # Search
    results = store.search(query_embedding, request.top_k * 2)  # Over-fetch for filtering

    # Apply metadata filters (may leave fewer than top_k results)
    if request.filter:
        results = [
            r for r in results
            if all(r["metadata"].get(k) == v for k, v in request.filter.items())
        ]

    results = results[:request.top_k]

    return SearchResponse(
        results=[SearchResult(**r) for r in results],
        query_time_ms=(time.perf_counter() - start) * 1000
    )


@app.post("/index")
async def add_to_index(request: IndexRequest):
    if request.metadata is not None and len(request.metadata) != len(request.documents):
        raise HTTPException(status_code=400, detail="metadata must have one entry per document")

    store = get_store()
    embeddings = await asyncio.to_thread(get_embeddings_batch, request.documents)
    store.add(embeddings, request.documents, request.metadata)

    # Persist
    store.save("./production_index")

    return {"indexed": len(request.documents)}

References

- OpenAI Embeddings: https://platform.openai.com/docs/guides/embeddings

- FAISS: https://github.com/facebookresearch/faiss

- Sentence Transformers: https://www.sbert.net/

- Pinecone: https://www.pinecone.io/

Conclusion

Embedding search enables finding information by meaning rather than keywords. Start with simple NumPy-based search for prototyping—it handles thousands of documents well. Move to FAISS for larger datasets; the HNSW index offers excellent speed-accuracy tradeoffs for millions of vectors. Consider hybrid search combining semantic and keyword approaches for the best of both worlds. Cache query embeddings to reduce API costs and latency. For production, add metadata filtering, result re-ranking, and proper persistence. The embedding model matters—text-embedding-3-small is cost-effective for most use cases, while text-embedding-3-large provides better accuracy for demanding applications. Test with your actual data to find the right balance of speed, accuracy, and cost.
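
One way to act on that last point is to measure how often an approximate index returns the same neighbours as exact search. Below is a minimal recall@k sketch, assuming you already have two lists of result IDs per query, one from an exact index and one from an approximate index. The `recall_at_k` helper and the sample IDs are illustrative.

```python
def recall_at_k(exact: list[list[int]], approximate: list[list[int]], k: int) -> float:
    """Average fraction of the exact top-k that also appears in the approximate top-k."""
    if len(exact) != len(approximate):
        raise ValueError("Both result sets must cover the same queries")
    if not exact:
        return 0.0
    total = 0.0
    for truth, candidate in zip(exact, approximate):
        truth_set = set(truth[:k])
        if truth_set:
            total += len(truth_set & set(candidate[:k])) / len(truth_set)
    return total / len(exact)


# Result IDs for two queries (made up)
exact_ids = [[3, 7, 1], [4, 2, 9]]
approx_ids = [[3, 1, 8], [4, 2, 9]]

score = recall_at_k(exact_ids, approx_ids, k=3)
print(f"recall@3 = {score:.3f}")
```

Here the approximate index misses one of three neighbours for the first query and none for the second, giving an average recall of 5/6.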
