In the past, we managed Azure resources through the AzureRM PowerShell modules. These gave Azure administrators and developers a flexible way to run automated deployments of Azure Resource Manager resources. Azure CLI is the next step: it is cross-platform and offers simplified commands that make life easier. You can use Azure […]

Read more →

Month: September 2018

NDepend–VSTS/Azure DevOps Integration–Part 01

In my previous article I wrote an introduction to NDepend and how it helps an Agile team ensure code quality. That article showed how to use NDepend on a developer machine. In this article we will familiarize ourselves with using NDepend in the build automation pipeline in your […]

Read more →

New Microsoft Azure Certifications

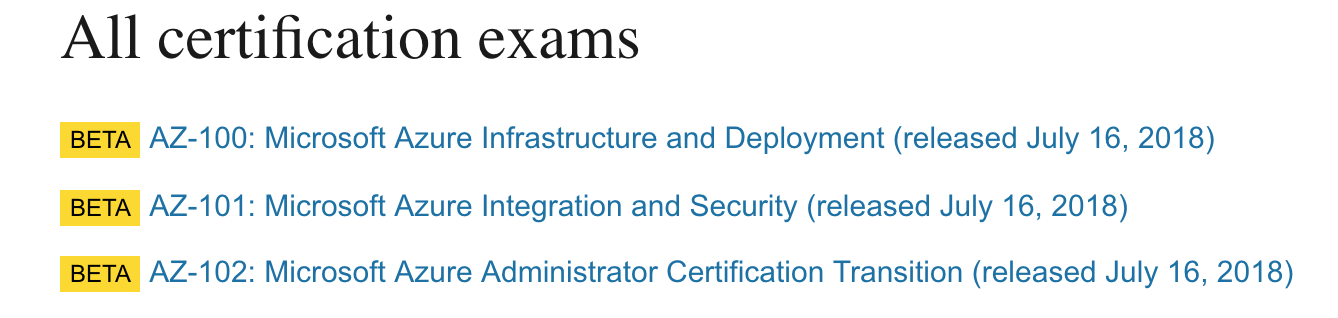

Microsoft has recently announced new certification exam tracks for Azure Administrators, Developers and Architects. Here is the lineup, which should help you advance your career with the right certifications. The three new Microsoft Azure Certifications are: Microsoft Certified Azure Developer, Microsoft Certified Azure Administrator, and Microsoft Certified Azure Architect. These certifications would essentially split the previous […]

Read more →