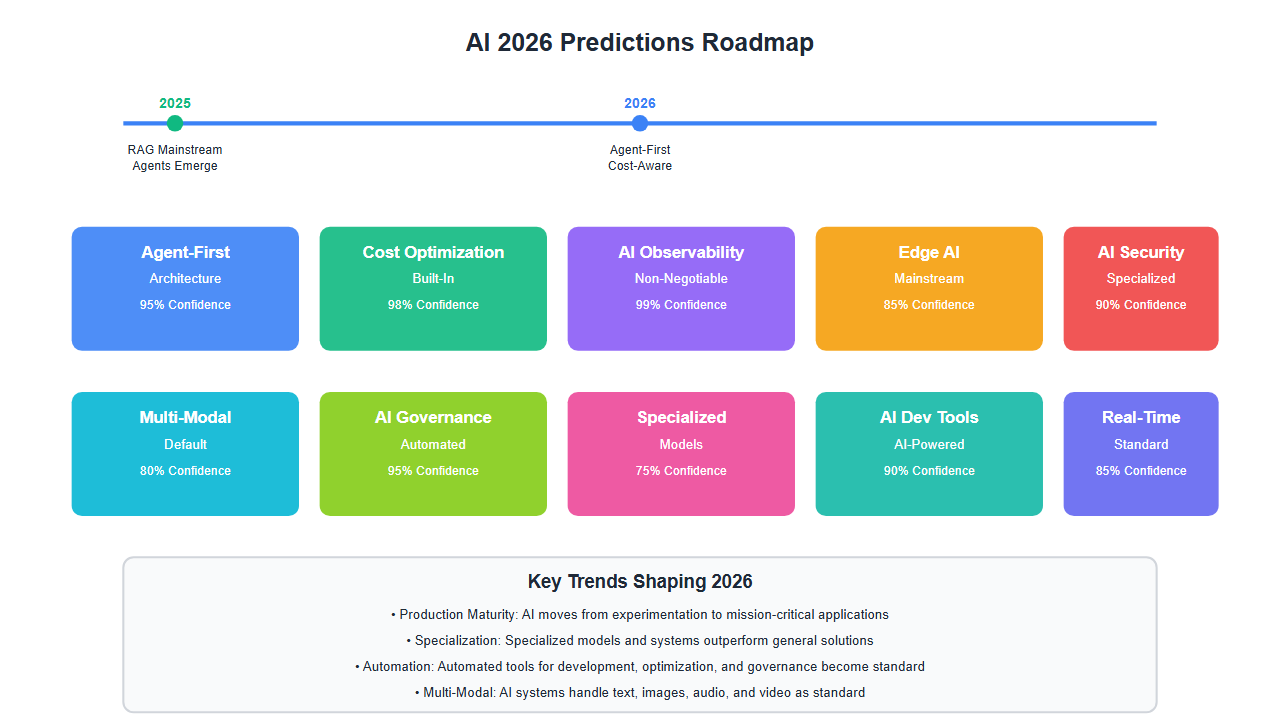

2025 was the year AI moved from experimentation to production. We saw RAG become mainstream, multi-agent systems go live, and production AI architectures mature. After building 50+ AI systems this year and tracking every major development, I’m seeing clear patterns that point to what’s coming in 2026. Here’s my comprehensive forecast based on real-world experience and industry trends.

Why These Predictions Matter

Predictions aren’t crystal balls—they’re informed forecasts based on observable patterns. In 2025, I watched three major shifts:

- RAG to Agents: The industry moved from retrieval-augmented generation to autonomous agent systems

- Cost Optimization: Production deployments forced a focus on cost efficiency, leading to quantization breakthroughs

- Production Maturity: AI systems moved from prototypes to mission-critical applications

These shifts aren’t random—they follow predictable patterns. Here’s what they tell us about 2026.

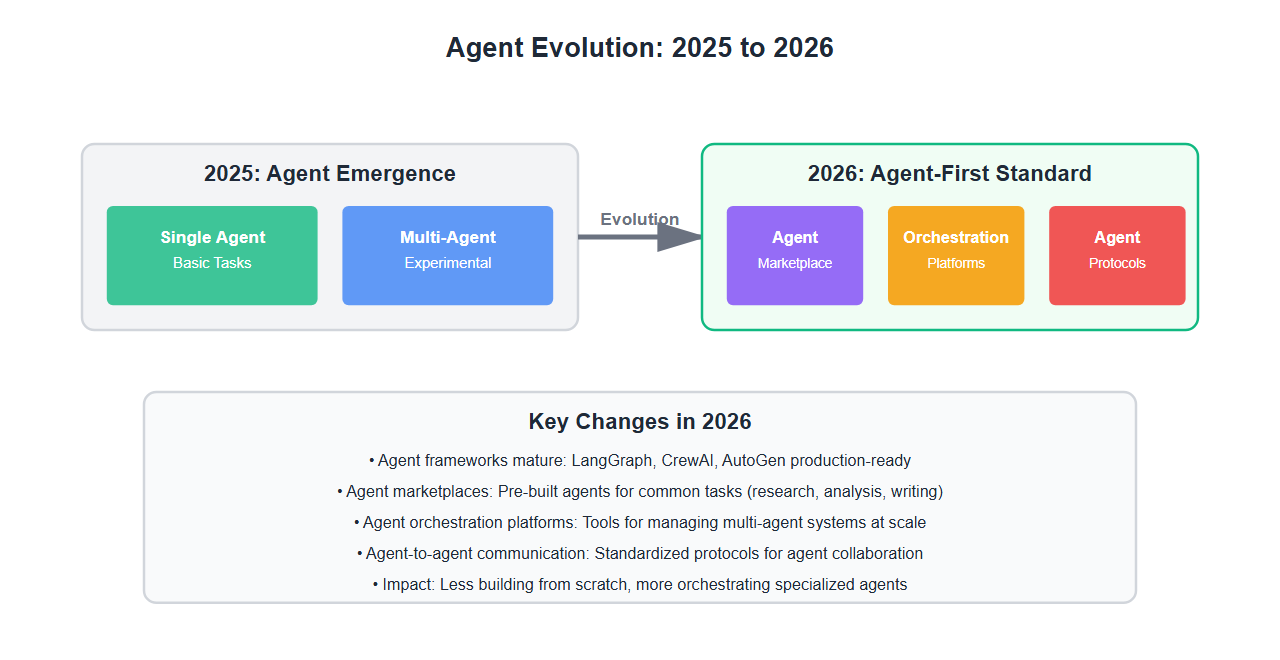

Prediction 1: Agent-First Architecture Becomes Standard

Confidence: 95%

In 2025, we saw multi-agent systems go from research to production. LangGraph adoption exploded, CrewAI gained traction, and companies started deploying agent workflows. In 2026, this accelerates.

What We’ll See:

- Agent frameworks mature: LangGraph, CrewAI, and AutoGen will have production-ready features

- Agent marketplaces emerge: Pre-built agents for common tasks (research, analysis, writing)

- Agent orchestration platforms: Tools specifically for managing multi-agent systems at scale

- Agent-to-agent communication standards: Protocols for agents to collaborate seamlessly

Why This Will Happen:

After building 18+ multi-agent systems in 2025, I’ve seen the pattern: single-agent systems hit complexity limits. Multi-agent systems solve this through specialization. The infrastructure is ready, the patterns are proven, and the demand is clear.

# 2026: Agent-First Architecture Pattern

from langgraph.graph import StateGraph

from typing import TypedDict, List

class AgentEcosystem(TypedDict):

"""2026: Standardized agent ecosystem"""

coordinator: str # Orchestrates specialized agents

research_agents: List[str] # Specialized research agents

analysis_agents: List[str] # Specialized analysis agents

execution_agents: List[str] # Specialized execution agents

communication_protocol: str # Standardized agent communication

# 2026 Prediction: Agent marketplaces

class AgentMarketplace:

"""Pre-built agents for common tasks"""

def __init__(self):

self.available_agents = {

"research": ResearchAgent(),

"data_analysis": DataAnalysisAgent(),

"code_generation": CodeGenerationAgent(),

"content_creation": ContentCreationAgent()

}

def deploy_agent_workflow(self, task: str) -> AgentEcosystem:

"""Deploy pre-configured agent workflow"""

# 2026: One-line deployment of complex agent systems

return self._orchestrate_agents(task)

Impact on Developers:

You’ll spend less time building agents from scratch and more time orchestrating specialized agents. Agent composition becomes the primary skill, not agent creation.

Prediction 2: Cost Optimization Becomes a Core Feature, Not an Afterthought

Confidence: 98%

In 2025, we saw companies hit cost walls. LLM API costs spiraled, GPU costs became prohibitive, and optimization became critical. In 2026, cost optimization becomes built-in, not bolted-on.

What We’ll See:

- Auto-quantization: Models automatically quantized based on use case

- Intelligent model routing: Systems automatically route to cheapest model that meets quality requirements

- Cost-aware agent systems: Agents make decisions considering cost constraints

- Unified cost optimization platforms: Tools that optimize across models, providers, and infrastructure

Why This Will Happen:

I’ve seen production systems where API costs exceeded infrastructure costs 10:1. Companies can’t scale at these costs. The solution isn’t just optimization—it’s cost-aware architecture from day one.

# 2026: Cost-Aware AI Architecture

class CostAwareLLMOrchestrator:

"""2026: Automatic cost optimization"""

def __init__(self):

self.models = {

"gpt-4": {"cost_per_1k": 0.03, "quality": 0.95},

"gpt-3.5-turbo": {"cost_per_1k": 0.002, "quality": 0.85},

"claude-3-haiku": {"cost_per_1k": 0.001, "quality": 0.80}

}

def route_request(self, task: dict, quality_requirement: float) -> str:

"""Automatically route to cheapest model meeting quality"""

# 2026: Automatic cost-quality optimization

suitable_models = [

model for model, specs in self.models.items()

if specs["quality"] >= quality_requirement

]

cheapest = min(

suitable_models,

key=lambda m: self.models[m]["cost_per_1k"]

)

return cheapest

def auto_quantize(self, model: str, use_case: str) -> str:

"""Auto-quantize based on use case requirements"""

# 2026: Automatic quantization based on requirements

if use_case == "real_time":

return f"{model}-4bit" # Fast inference

elif use_case == "batch":

return f"{model}-8bit" # Balanced

else:

return model # Full precision

Impact on Developers:

Cost optimization becomes a first-class concern in architecture decisions. You’ll design systems with cost constraints from the start, not optimize after deployment.

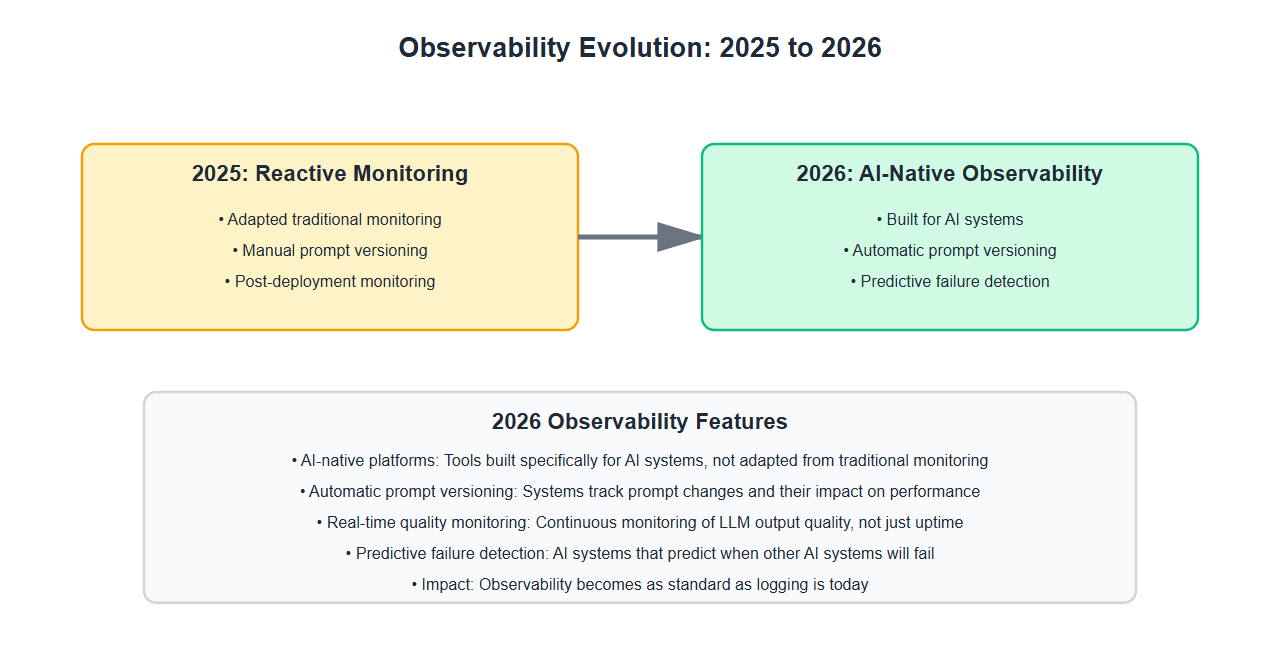

Prediction 3: Production AI Observability Becomes Non-Negotiable

Confidence: 99%

In 2025, we learned that AI systems fail in production without proper observability. In 2026, observability becomes as standard as logging is today.

What We’ll See:

- AI-native observability platforms: Tools built specifically for AI systems, not adapted from traditional monitoring

- Automatic prompt versioning: Systems track prompt changes and their impact on performance

- Real-time quality monitoring: Continuous monitoring of LLM output quality, not just uptime

- Predictive failure detection: AI systems that predict when other AI systems will fail

Why This Will Happen:

I’ve seen production AI systems fail silently. A prompt change degrades quality by 20%, but no one notices for weeks. Traditional monitoring doesn’t catch AI-specific failures. The industry needs AI-native observability.

# 2026: AI-Native Observability

class AINativeObservability:

"""2026: Observability built for AI systems"""

def __init__(self):

self.metrics = {

"prompt_performance": PromptPerformanceTracker(),

"model_quality": ModelQualityMonitor(),

"cost_tracking": CostTracker(),

"latency_analysis": LatencyAnalyzer()

}

def track_prompt_version(self, prompt: str, version: str):

"""Automatic prompt versioning and impact tracking"""

# 2026: Track every prompt change and its impact

baseline = self.metrics["prompt_performance"].get_baseline()

current = self.metrics["prompt_performance"].measure(prompt)

impact = {

"quality_delta": current["quality"] - baseline["quality"],

"cost_delta": current["cost"] - baseline["cost"],

"latency_delta": current["latency"] - baseline["latency"]

}

return impact

def predict_failure(self, system_state: dict) -> float:

"""Predict probability of system failure"""

# 2026: Predictive failure detection for AI systems

indicators = [

system_state.get("quality_trend", "stable"),

system_state.get("cost_trend", "stable"),

system_state.get("error_rate", 0.0)

]

# ML model predicts failure probability

failure_probability = self._failure_model.predict(indicators)

return failure_probability

Impact on Developers:

Observability becomes a core skill. You’ll instrument AI systems from day one, not add monitoring later. AI-native observability tools become as essential as application monitoring tools are today.

Prediction 4: Edge AI Goes Mainstream

Confidence: 85%

In 2025, edge AI was experimental. In 2026, it becomes practical for production use cases.

What We’ll See:

- On-device LLMs: Small language models running on phones, tablets, and edge devices

- Hybrid edge-cloud architectures: Systems that intelligently route between edge and cloud

- Edge AI frameworks mature: ONNX Runtime, TensorFlow Lite, and Core ML become production-ready

- Privacy-first AI: Edge AI enables true privacy by keeping data on-device

Why This Will Happen:

Privacy regulations are tightening. Latency requirements are increasing. Cost pressures are mounting. Edge AI solves all three. The technology is ready—quantized models, efficient runtimes, and powerful edge hardware.

# 2026: Hybrid Edge-Cloud AI

class HybridEdgeCloudAI:

"""2026: Intelligent edge-cloud routing"""

def __init__(self):

self.edge_model = load_edge_model() # On-device model

self.cloud_models = {

"fast": load_cloud_model("fast"),

"accurate": load_cloud_model("accurate")

}

def route_inference(self, input_data: dict, requirements: dict) -> dict:

"""Intelligently route between edge and cloud"""

# 2026: Automatic routing based on requirements

# Privacy-sensitive: use edge

if requirements.get("privacy", False):

return self.edge_model.predict(input_data)

# Latency-sensitive: use edge if fast enough

if requirements.get("max_latency_ms", 1000) < 100:

edge_result = self.edge_model.predict(input_data)

if edge_result["confidence"] > 0.8:

return edge_result

# Quality-sensitive: use cloud

if requirements.get("min_quality", 0.9) > 0.9:

return self.cloud_models["accurate"].predict(input_data)

# Default: use edge

return self.edge_model.predict(input_data)

Impact on Developers:

You’ll design hybrid architectures from the start. Edge AI becomes a first-class deployment target, not an afterthought. Privacy and latency become architectural decisions, not constraints.

Prediction 5: AI Security Becomes a Specialized Discipline

Confidence: 90%

In 2025, we saw prompt injection attacks, model extraction, and data leakage. In 2026, AI security becomes a specialized field with dedicated tools and practices.

What We’ll See:

- AI security frameworks: Comprehensive frameworks for securing AI systems

- Automated security testing: Tools that automatically test for prompt injection, model extraction, and other attacks

- AI security certifications: Certifications for AI security professionals

- Regulatory compliance tools: Tools that ensure AI systems meet security regulations

Why This Will Happen:

AI attacks are different from traditional attacks. Prompt injection can’t be prevented with firewalls. Model extraction requires different defenses. The industry needs AI-specific security tools and practices.

# 2026: AI Security Framework

class AISecurityFramework:

"""2026: Comprehensive AI security"""

def __init__(self):

self.protections = {

"prompt_injection": PromptInjectionDefense(),

"model_extraction": ModelExtractionDefense(),

"data_leakage": DataLeakageDefense(),

"adversarial_attacks": AdversarialDefense()

}

def secure_prompt(self, user_input: str) -> str:

"""2026: Automatic prompt injection defense"""

# Detect and neutralize prompt injection attempts

sanitized = self.protections["prompt_injection"].sanitize(user_input)

return sanitized

def protect_model(self, model, access_level: str):

"""2026: Model extraction protection"""

# Rate limiting, output filtering, access controls

return self.protections["model_extraction"].protect(model, access_level)

def audit_system(self, ai_system: dict) -> dict:

"""2026: Automated AI security audit"""

vulnerabilities = []

for threat_type, protection in self.protections.items():

if not protection.is_enabled(ai_system):

vulnerabilities.append({

"threat": threat_type,

"severity": "high",

"recommendation": protection.get_recommendation()

})

return {

"vulnerabilities": vulnerabilities,

"security_score": self._calculate_score(vulnerabilities)

}

Impact on Developers:

AI security becomes a required skill. You’ll integrate security from design, not add it later. AI security tools become as essential as traditional security tools.

Prediction 6: Multi-Modal AI Becomes the Default

Confidence: 80%

In 2025, most AI systems were text-only. In 2026, multi-modal (text, image, audio, video) becomes standard.

What We’ll See:

- Unified multi-modal models: Single models that handle text, images, audio, and video

- Multi-modal agent systems: Agents that can process and generate across modalities

- Cross-modal understanding: Systems that understand relationships between different modalities

- Multi-modal RAG: Retrieval systems that work across text, images, and other media

Why This Will Happen:

The world is multi-modal. Most information isn’t text—it’s images, videos, audio. AI systems need to handle this naturally. The technology is ready—GPT-4 Vision, Claude 3, and Gemini are already multi-modal.

# 2026: Multi-Modal AI Systems

class MultiModalAISystem:

"""2026: Unified multi-modal AI"""

def __init__(self):

self.model = load_multimodal_model() # Handles all modalities

def process(self, inputs: dict) -> dict:

"""Process multi-modal inputs"""

# 2026: Single model handles text, images, audio, video

result = self.model.process({

"text": inputs.get("text"),

"image": inputs.get("image"),

"audio": inputs.get("audio"),

"video": inputs.get("video")

})

return result

def generate(self, prompt: str, modality: str) -> any:

"""Generate content in any modality"""

# 2026: Generate text, images, audio, or video from prompts

if modality == "text":

return self.model.generate_text(prompt)

elif modality == "image":

return self.model.generate_image(prompt)

elif modality == "audio":

return self.model.generate_audio(prompt)

elif modality == "video":

return self.model.generate_video(prompt)

Impact on Developers:

You’ll design multi-modal systems from the start. Text-only AI becomes the exception, not the rule. Multi-modal becomes a core skill.

Prediction 7: AI Governance and Compliance Tools Mature

Confidence: 95%

In 2025, AI governance was manual and ad-hoc. In 2026, governance becomes automated and integrated.

What We’ll See:

- Automated compliance checking: Tools that automatically check AI systems against regulations

- Governance platforms: Comprehensive platforms for managing AI governance

- Audit trails: Automatic tracking of AI system changes and their compliance impact

- Risk assessment tools: Tools that automatically assess AI system risks

Why This Will Happen:

Regulations are coming. EU AI Act, US AI Executive Order, and others require governance. Manual governance doesn’t scale. Companies need automated tools.

# 2026: Automated AI Governance

class AIGovernancePlatform:

"""2026: Automated AI governance"""

def __init__(self):

self.regulations = {

"eu_ai_act": EUAIActCompliance(),

"us_executive_order": USExecutiveOrderCompliance(),

"gdpr": GDPRCompliance()

}

def check_compliance(self, ai_system: dict) -> dict:

"""Automatically check compliance against all regulations"""

results = {}

for regulation_name, checker in self.regulations.items():

results[regulation_name] = checker.assess(ai_system)

return {

"compliant": all(r["compliant"] for r in results.values()),

"violations": [

{"regulation": name, "violation": r["violations"]}

for name, r in results.items()

if not r["compliant"]

],

"recommendations": self._generate_recommendations(results)

}

def track_changes(self, ai_system: dict, change: dict):

"""Track changes and their compliance impact"""

# 2026: Automatic audit trail of all changes

before = self.check_compliance(ai_system)

# Apply change

updated_system = self._apply_change(ai_system, change)

after = self.check_compliance(updated_system)

# Log impact

self._log_compliance_change({

"change": change,

"before": before,

"after": after,

"impact": self._calculate_impact(before, after)

})

Impact on Developers:

Governance becomes part of the development workflow, not a separate process. You’ll check compliance as you develop, not before deployment.

Prediction 8: Specialized AI Models Dominate General Models

Confidence: 75%

In 2025, general models (GPT-4, Claude) dominated. In 2026, specialized models become the default for production use cases.

What We’ll See:

- Domain-specific models: Models fine-tuned for specific industries (healthcare, finance, legal)

- Task-specific models: Models optimized for specific tasks (code generation, data analysis, writing)

- Cost-effective specialized models: Smaller, cheaper models that outperform general models on specific tasks

- Model composition: Systems that combine specialized models for complex tasks

Why This Will Happen:

General models are expensive and overkill for many tasks. Specialized models are cheaper, faster, and often better. As fine-tuning becomes easier and cheaper, specialization becomes the default.

# 2026: Specialized Model Composition

class SpecializedModelOrchestrator:

"""2026: Compose specialized models for tasks"""

def __init__(self):

self.models = {

"code_generation": load_model("code-specialist"),

"data_analysis": load_model("analysis-specialist"),

"writing": load_model("writing-specialist"),

"research": load_model("research-specialist")

}

def execute_task(self, task: dict) -> dict:

"""Compose specialized models for complex tasks"""

# 2026: Route to specialized models based on task

if task["type"] == "code_generation":

return self.models["code_generation"].execute(task)

elif task["type"] == "data_analysis":

return self.models["data_analysis"].execute(task)

elif task["type"] == "writing":

return self.models["writing"].execute(task)

elif task["type"] == "research":

return self.models["research"].execute(task)

else:

# Complex task: compose multiple models

return self._compose_models(task)

def _compose_models(self, task: dict) -> dict:

"""Compose multiple specialized models"""

# 2026: Chain specialized models for complex tasks

research_result = self.models["research"].execute(task["research"])

analysis_result = self.models["data_analysis"].execute({

"data": research_result,

"task": task["analysis"]

})

output = self.models["writing"].execute({

"content": analysis_result,

"format": task["format"]

})

return output

Impact on Developers:

You’ll choose specialized models over general models for production. Model selection becomes a key architectural decision. Specialization becomes a competitive advantage.

Prediction 9: AI Development Tools Become AI-Powered

Confidence: 90%

In 2025, AI development was manual. In 2026, AI tools help build AI systems.

What We’ll See:

- AI-powered code generation: Tools that generate AI system code from specifications

- Automated testing: AI systems that test other AI systems

- Intelligent debugging: Tools that understand AI system failures and suggest fixes

- Automated optimization: Tools that automatically optimize AI systems for performance and cost

Why This Will Happen:

AI development is complex. Building AI systems requires expertise in multiple domains. AI-powered tools can help developers build better AI systems faster.

# 2026: AI-Powered Development Tools

class AIPoweredDevTools:

"""2026: AI tools for building AI systems"""

def generate_ai_system(self, specification: dict) -> dict:

"""Generate AI system code from specification"""

# 2026: AI generates AI system code

architecture = self._design_architecture(specification)

code = self._generate_code(architecture)

tests = self._generate_tests(code)

return {

"architecture": architecture,

"code": code,

"tests": tests

}

def debug_ai_system(self, system: dict, error: dict) -> dict:

"""Intelligently debug AI system failures"""

# 2026: AI understands AI system failures

analysis = self._analyze_error(error, system)

suggestions = self._generate_fixes(analysis)

return {

"root_cause": analysis["root_cause"],

"suggestions": suggestions,

"confidence": analysis["confidence"]

}

def optimize_ai_system(self, system: dict, objectives: dict) -> dict:

"""Automatically optimize AI system"""

# 2026: AI optimizes AI systems

current_metrics = self._measure_system(system)

optimizations = []

if objectives.get("reduce_cost"):

optimizations.append(self._optimize_cost(system))

if objectives.get("improve_performance"):

optimizations.append(self._optimize_performance(system))

if objectives.get("enhance_quality"):

optimizations.append(self._optimize_quality(system))

optimized_system = self._apply_optimizations(system, optimizations)

return optimized_system

Impact on Developers:

AI development becomes faster and more accessible. AI-powered tools help developers build better AI systems. The barrier to entry for AI development decreases.

Prediction 10: Real-Time AI Becomes Standard

Confidence: 85%

In 2025, most AI systems were batch or near-real-time. In 2026, real-time AI becomes standard for interactive applications.

What We’ll See:

- Sub-100ms inference: AI systems that respond in under 100ms

- Streaming AI: Real-time streaming of AI outputs as they’re generated

- Interactive AI: AI systems that respond to user input in real-time

- Real-time learning: AI systems that learn and adapt in real-time

Why This Will Happen:

User expectations are increasing. Interactive applications require real-time responses. The technology is ready—quantization, edge AI, and optimized models enable real-time performance.

# 2026: Real-Time AI Systems

class RealTimeAISystem:

"""2026: Real-time AI with streaming"""

def __init__(self):

self.model = load_optimized_model() # Optimized for speed

self.streaming = True

def process_streaming(self, input_stream, output_callback):

"""Process streaming input with streaming output"""

# 2026: Real-time streaming AI

for chunk in input_stream:

# Process chunk immediately

result_chunk = self.model.process_chunk(chunk)

# Stream output immediately

output_callback(result_chunk)

def interactive_mode(self, user_input_callback, ai_response_callback):

"""Interactive real-time AI"""

# 2026: Real-time interactive AI

while True:

user_input = user_input_callback()

# Process in real-time

response = self.model.process(user_input)

# Stream response as it's generated

for token in self.model.stream_generate(response):

ai_response_callback(token)

Impact on Developers:

Real-time becomes a requirement, not a nice-to-have. You’ll design systems for real-time from the start. Latency becomes a core architectural concern.

Best Practices: Lessons from 2025 That Will Shape 2026

Based on building 50+ AI systems in 2025, here’s what will be essential in 2026:

- Design for cost from day one: Cost optimization must be built-in, not added later

- Observability is non-negotiable: AI systems need AI-native observability from the start

- Security is a first-class concern: AI security must be integrated, not bolted-on

- Specialization beats generalization: Specialized models outperform general models for production use cases

- Agent-first architecture: Design for multi-agent systems from the start

- Multi-modal is the future: Build multi-modal capabilities into your systems

- Edge AI enables new use cases: Edge AI opens up privacy-sensitive and latency-critical applications

- Governance must be automated: Manual governance doesn’t scale

- Real-time is becoming standard: Design for real-time from the start

- AI tools for AI development: Use AI-powered tools to build better AI systems

Common Mistakes to Avoid in 2026

Based on mistakes I’ve seen in 2025, here’s what to avoid in 2026:

- Ignoring cost until it’s too late: Design for cost from day one

- Adding observability after deployment: Build observability in from the start

- Treating AI security like traditional security: AI needs AI-specific security

- Using general models for everything: Specialized models are often better

- Building single-agent systems for complex tasks: Multi-agent systems are the future

- Ignoring multi-modal capabilities: The world is multi-modal

- Overlooking edge AI: Edge AI enables new use cases

- Manual governance: Automate governance from the start

- Designing for batch when real-time is needed: Design for real-time from the start

- Not using AI tools for AI development: AI tools can help build better AI systems

The Big Picture: What 2026 Really Means

2026 isn’t just about new technologies—it’s about AI maturing into a production-ready discipline. We’re moving from:

- Experimentation to Production: AI systems are mission-critical, not prototypes

- General to Specialized: Specialized models and systems for specific use cases

- Manual to Automated: Automated tools for development, optimization, and governance

- Single-Modal to Multi-Modal: AI systems that handle text, images, audio, and video

- Cloud to Edge: Edge AI enabling new use cases

- Batch to Real-Time: Real-time AI becoming standard

This shift requires new skills, new tools, and new architectures. The developers and companies that adapt will thrive. Those that don’t will struggle.

🎯 Key Takeaway

2026 will be the year AI becomes truly production-ready. Agent-first architectures, cost-aware design, AI-native observability, and specialized models will become standard. The companies that embrace these trends will build better AI systems faster. The ones that don’t will be left behind. Start preparing now—the future is coming fast.

How to Prepare for 2026

Based on these predictions, here’s how to prepare:

- Learn agent orchestration: Multi-agent systems are the future

- Master cost optimization: Cost-aware design is essential

- Invest in observability: AI-native observability is non-negotiable

- Explore edge AI: Edge AI enables new use cases

- Understand AI security: AI security is a specialized discipline

- Experiment with multi-modal: Multi-modal is becoming standard

- Automate governance: Manual governance doesn’t scale

- Consider specialized models: Specialized models often outperform general models

- Design for real-time: Real-time is becoming standard

- Use AI development tools: AI tools can help build better AI systems

Bottom Line

2026 will be the year AI matures. Agent-first architectures, cost-aware design, AI-native observability, specialized models, and multi-modal capabilities will become standard. The developers and companies that embrace these trends will build better AI systems faster. Start preparing now—the future is coming fast, and it’s going to be exciting.

The predictions I’ve shared are based on observable patterns from 2025. They’re not guarantees, but they’re informed forecasts based on real-world experience. Use them to guide your decisions, but stay flexible. The AI landscape changes fast, and the best predictions are the ones that help you adapt quickly.

Here’s to 2026—the year AI becomes truly production-ready.

Discover more from C4: Container, Code, Cloud & Context

Subscribe to get the latest posts sent to your email.