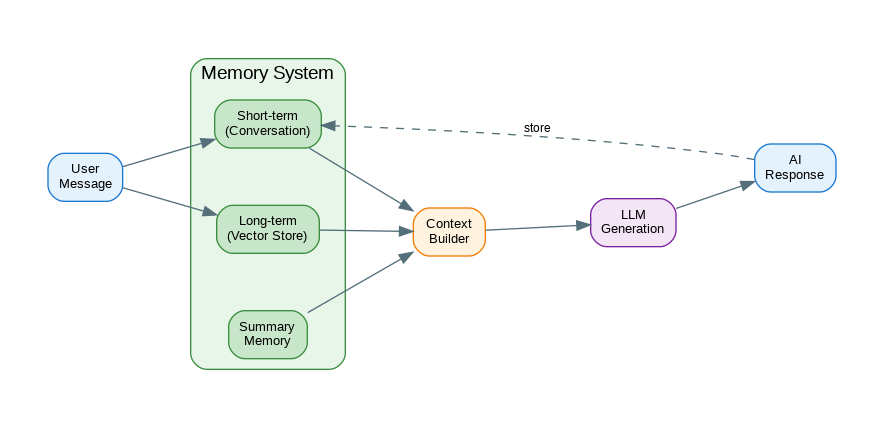

Introduction: Chatbots without memory feel robotic. They forget your name, repeat questions, and lose context mid-conversation. Production chatbots need layered memory: short-term memory for the current conversation, long-term memory for user preferences and history, and summary memory to compress long interactions. This guide covers implementing these memory patterns (conversation buffers, automatic summarization, vector-based retrieval, entity tracking) and hybrid approaches that combine multiple memory types for natural, context-aware conversations.

Basic Conversation Memory
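The `ConversationMemory` class in this section trims by message count, so twenty one-line replies and twenty pasted log files are treated the same. Here is a minimal sketch of trimming by an approximate token budget instead. `ChatMessage`, `estimate_tokens`, and `trim_to_budget` are hypothetical names, and the 4-characters-per-token ratio is a rough assumption for English text, not a real tokenizer.

```python
from dataclasses import dataclass


@dataclass
class ChatMessage:
    role: str  # "user" or "assistant"
    content: str


def estimate_tokens(text: str) -> int:
    # Rough assumption: ~4 characters per token for English text.
    # Swap in a real tokenizer (for example tiktoken) for accurate counts.
    return max(1, len(text) // 4)


def trim_to_budget(messages: list[ChatMessage], max_tokens: int) -> list[ChatMessage]:
    """Keep the most recent messages whose estimated token total fits in max_tokens."""
    kept: list[ChatMessage] = []
    used = 0
    # Walk backwards from the newest message so the latest context survives.
    for msg in reversed(messages):
        cost = estimate_tokens(msg.content)
        if used + cost > max_tokens:
            break
        kept.append(msg)
        used += cost
    kept.reverse()  # restore chronological order
    return kept


history = [
    ChatMessage("user", "a" * 400),      # ~100 tokens
    ChatMessage("assistant", "b" * 40),  # ~10 tokens
    ChatMessage("user", "c" * 40),       # ~10 tokens
]
print([m.role for m in trim_to_budget(history, max_tokens=30)])  # ['assistant', 'user']
```

Because the function walks backwards from the newest message, the oldest context is dropped first. This matches the behavior of the message-count version, but it responds to how much text is actually in the window.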

```python
from dataclasses import dataclass, field
from datetime import datetime
from typing import Optional

from openai import OpenAI

client = OpenAI()  # reads OPENAI_API_KEY from the environment


@dataclass
class Message:
    role: str  # "user" or "assistant"
    content: str
    timestamp: datetime = field(default_factory=datetime.now)


class ConversationMemory:
    """Simple conversation buffer memory."""

    def __init__(self, max_messages: int = 20):
        self.messages: list[Message] = []
        self.max_messages = max_messages

    def add(self, role: str, content: str):
        """Add a message to memory."""
        self.messages.append(Message(role=role, content=content))
        # Trim to the most recent max_messages entries
        if len(self.messages) > self.max_messages:
            self.messages = self.messages[-self.max_messages:]

    def get_messages(self) -> list[dict]:
        """Get messages in OpenAI chat format."""
        return [
            {"role": m.role, "content": m.content}
            for m in self.messages
        ]

    def clear(self):
        """Clear conversation history."""
        self.messages = []


class SimpleChatbot:
    """Chatbot with conversation memory."""

    def __init__(self, system_prompt: str = "You are a helpful assistant."):
        self.system_prompt = system_prompt
        self.memory = ConversationMemory()

    def chat(self, user_message: str) -> str:
        """Send a message and get a response."""
        # Add user message to memory
        self.memory.add("user", user_message)

        # Build messages with system prompt
        messages = [{"role": "system", "content": self.system_prompt}]
        messages.extend(self.memory.get_messages())

        # Get response
        response = client.chat.completions.create(
            model="gpt-4o",
            messages=messages
        )
        assistant_message = response.choices[0].message.content

        # Add assistant response to memory
        self.memory.add("assistant", assistant_message)
        return assistant_message


# Usage
bot = SimpleChatbot("You are a friendly coding assistant.")
print(bot.chat("Hi, I'm Alex!"))
print(bot.chat("What's my name?"))  # Should remember "Alex"
print(bot.chat("Can you help me with Python?"))
```

Summary Memory for Long Conversations
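The summarization logic can be exercised without calling the API by injecting the summarizer as a function. This sketch uses a hypothetical `RollingSummary` class whose `summarize(previous_summary, evicted_lines)` callback stands in for the `gpt-4o-mini` call in `SummaryMemory`. It shows the core mechanic: once a threshold is crossed, evict everything except the most recent messages and fold the evicted lines into the running summary.

```python
from typing import Callable


class RollingSummary:
    """Keep the newest messages verbatim and fold older ones into a running summary."""

    def __init__(
        self,
        summarize: Callable[[str, list[str]], str],
        keep_recent: int = 10,
        threshold: int = 20,
    ):
        # keep_recent must be at least 1: lines[:-0] is empty, not "everything".
        if not 1 <= keep_recent < threshold:
            raise ValueError("expected 1 <= keep_recent < threshold")
        self.summarize = summarize  # stand-in for the LLM summarization call
        self.keep_recent = keep_recent
        self.threshold = threshold
        self.lines: list[str] = []
        self.summary = ""

    def add(self, role: str, content: str) -> None:
        self.lines.append(f"{role}: {content}")
        if len(self.lines) > self.threshold:
            evicted = self.lines[:-self.keep_recent]
            self.lines = self.lines[-self.keep_recent:]
            # Fold the previous summary and the evicted lines into a new summary.
            self.summary = self.summarize(self.summary, evicted)


# Usage with a stub summarizer that just counts how many messages were folded in.
def count_summarizer(previous: str, evicted: list[str]) -> str:
    total = int(previous.split()[0]) if previous else 0
    return f"{total + len(evicted)} earlier messages"


memory = RollingSummary(count_summarizer, keep_recent=4, threshold=8)
for i in range(9):
    memory.add("user", f"m{i}")
print(memory.summary, memory.lines)
```

Passing the previous summary into each call lets the summary accumulate instead of being overwritten. The `SummaryMemory` prompt includes `Previous summary` for the same reason.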

```python
class SummaryMemory:
    """Memory that summarizes old conversations."""

    def __init__(
        self,
        recent_messages: int = 10,
        summarize_threshold: int = 20
    ):
        self.messages: list[Message] = []
        self.summary: str = ""
        self.recent_count = recent_messages
        self.summarize_threshold = summarize_threshold

    def add(self, role: str, content: str):
        """Add message, summarizing if needed."""
        self.messages.append(Message(role=role, content=content))
        # Check if we need to summarize
        if len(self.messages) > self.summarize_threshold:
            self._summarize_old_messages()

    def _summarize_old_messages(self):
        """Summarize older messages to compress history."""
        # Split off the older messages; keep the recent ones verbatim
        old_messages = self.messages[:-self.recent_count]
        self.messages = self.messages[-self.recent_count:]

        # Build text to summarize
        conversation_text = "\n".join(
            f"{m.role}: {m.content}" for m in old_messages
        )

        # Generate a summary that also folds in the previous summary
        response = client.chat.completions.create(
            model="gpt-4o-mini",
            messages=[{
                "role": "user",
                "content": f"""Summarize this conversation, preserving key facts, user preferences, and important context:

{conversation_text}

Previous summary: {self.summary}

Provide a concise summary:"""
            }],
            max_tokens=500
        )
        self.summary = response.choices[0].message.content

    def get_context(self) -> list[dict]:
        """Get context including summary and recent messages."""
        context = []
        # Add summary if it exists
        if self.summary:
            context.append({
                "role": "system",
                "content": f"Previous conversation summary: {self.summary}"
            })
        # Add recent messages
        context.extend([
            {"role": m.role, "content": m.content}
            for m in self.messages
        ])
        return context


class SummarizingChatbot:
    """Chatbot that summarizes long conversations."""

    def __init__(self, system_prompt: str):
        self.system_prompt = system_prompt
        self.memory = SummaryMemory(recent_messages=10, summarize_threshold=20)

    def chat(self, user_message: str) -> str:
        self.memory.add("user", user_message)

        messages = [{"role": "system", "content": self.system_prompt}]
        messages.extend(self.memory.get_context())

        response = client.chat.completions.create(
            model="gpt-4o",
            messages=messages
        )
        assistant_message = response.choices[0].message.content

        self.memory.add("assistant", assistant_message)
        return assistant_message
```

Vector-Based Long-Term Memory
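`VectorMemory.retrieve` scores memories one at a time in a Python loop. Here is a minimal sketch of the same cosine-similarity top-k search done in one vectorized NumPy pass. It assumes the stored embeddings are stacked into a 2-D array with one non-zero row per memory; `top_k_cosine` is a hypothetical helper.

```python
import numpy as np


def top_k_cosine(query: np.ndarray, matrix: np.ndarray, k: int = 5) -> list[tuple[int, float]]:
    """Return (row_index, cosine_similarity) pairs for the k rows most similar to query."""
    if k <= 0 or matrix.shape[0] == 0:
        return []
    # Normalize once so cosine similarity reduces to a dot product
    # (assumes no all-zero vectors, which would divide by zero).
    q = query / np.linalg.norm(query)
    m = matrix / np.linalg.norm(matrix, axis=1, keepdims=True)
    scores = m @ q
    k = min(k, len(scores))
    # argpartition selects the k best in linear time; only those k are then sorted.
    idx = np.argpartition(-scores, k - 1)[:k]
    idx = idx[np.argsort(-scores[idx])]
    return [(int(i), float(scores[i])) for i in idx]


# Usage: stack stored embeddings once, then query repeatedly.
embeddings = np.array([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
print(top_k_cosine(np.array([1.0, 0.1]), embeddings, k=2))
```

For a few thousand memories a brute-force pass like this is usually fast enough. Beyond that, an approximate nearest-neighbor index or a dedicated vector store is the usual next step.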

```python
import numpy as np
from typing import Optional


class VectorMemory:
    """Long-term memory using vector similarity."""

    def __init__(self, max_memories: int = 1000):
        self.memories: list[dict] = []  # each: {content, embedding, metadata, timestamp}
        self.max_memories = max_memories

    def _get_embedding(self, text: str) -> list[float]:
        response = client.embeddings.create(
            model="text-embedding-3-small",
            input=text
        )
        return response.data[0].embedding

    def _cosine_similarity(self, a: list[float], b: list[float]) -> float:
        a, b = np.array(a), np.array(b)
        return float(np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b)))

    def store(self, content: str, metadata: Optional[dict] = None):
        """Embed and store a memory."""
        embedding = self._get_embedding(content)
        self.memories.append({
            "content": content,
            "embedding": embedding,
            "metadata": metadata or {},
            "timestamp": datetime.now()
        })
        # Drop the oldest memories once the cap is exceeded
        if len(self.memories) > self.max_memories:
            self.memories = self.memories[-self.max_memories:]

    def retrieve(self, query: str, top_k: int = 5) -> list[dict]:
        """Return the top_k memories most similar to the query."""
        if not self.memories:
            return []
        query_embedding = self._get_embedding(query)

        # Calculate similarities
        scored = []
        for memory in self.memories:
            score = self._cosine_similarity(query_embedding, memory["embedding"])
            scored.append((score, memory))

        # Sort by score (highest first) and return top-k
        scored.sort(key=lambda x: x[0], reverse=True)
        return [
            {"content": m["content"], "score": s, "metadata": m["metadata"]}
            for s, m in scored[:top_k]
        ]


class LongTermMemoryChatbot:
    """Chatbot with both short-term and long-term memory."""

    def __init__(self, system_prompt: str, user_id: str):
        self.system_prompt = system_prompt
        self.user_id = user_id
        self.short_term = ConversationMemory(max_messages=10)
        self.long_term = VectorMemory(max_memories=500)

    def chat(self, user_message: str) -> str:
        # Add to short-term memory
        self.short_term.add("user", user_message)

        # Retrieve relevant long-term memories
        relevant_memories = self.long_term.retrieve(user_message, top_k=3)

        # Build context
        memory_context = ""
        if relevant_memories:
            memory_context = "Relevant memories from past conversations:\n"
            for mem in relevant_memories:
                memory_context += f"- {mem['content']}\n"

        messages = [
            {"role": "system", "content": f"{self.system_prompt}\n\n{memory_context}"}
        ]
        messages.extend(self.short_term.get_messages())

        # Get response
        response = client.chat.completions.create(
            model="gpt-4o",
            messages=messages
        )
        assistant_message = response.choices[0].message.content

        # Record the reply in short-term memory
        self.short_term.add("assistant", assistant_message)
        # Store important information in long-term memory
        self._extract_and_store_facts(user_message, assistant_message)
        return assistant_message

    def _extract_and_store_facts(self, user_msg: str, assistant_msg: str):
        """Extract and store important facts from the exchange."""
        # Use an LLM to extract facts worth remembering
        response = client.chat.completions.create(
            model="gpt-4o-mini",
            messages=[{
                "role": "user",
                "content": f"""Extract any important facts worth remembering from this exchange.
Include: user preferences, personal info shared, important decisions, key topics discussed.
Return each fact on a new line, or "NONE" if nothing important.

User: {user_msg}
Assistant: {assistant_msg}"""
            }],
            max_tokens=200
        )
        facts = response.choices[0].message.content.strip()
        if facts.upper() == "NONE":
            return
        for line in facts.split("\n"):
            # Strip list markers the model may add, such as "- " or "* "
            fact = line.strip().lstrip("-* ").strip()
            if fact:
                self.long_term.store(
                    fact,
                    metadata={"user_id": self.user_id, "type": "fact"}
                )
```

Entity Memory
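The pipe-delimited format that `_extract_entities` asks the model for is easy to parse, but model output is not always tidy. Here is a sketch of a standalone parser, assuming the `name|key:value|key:value` format from the prompt. It tolerates list markers, skips malformed lines, and merges repeated mentions case-insensitively (as `EntityMemory` does with `name.lower()`). `parse_entity_lines` is a hypothetical name.

```python
def parse_entity_lines(text: str) -> dict[str, dict[str, str]]:
    """Parse 'name|key:value|key:value' lines into {name_lower: {key: value}}."""
    entities: dict[str, dict[str, str]] = {}
    if text.strip().upper() == "NONE":
        return entities
    for line in text.strip().splitlines():
        # Tolerate list markers the model sometimes adds, such as "- " or "* ".
        parts = line.strip().lstrip("-* ").split("|")
        name = parts[0].strip()
        attrs: dict[str, str] = {}
        for part in parts[1:]:
            if ":" in part:
                # Split on the first colon only, so values like URLs stay intact.
                key, value = part.split(":", 1)
                if key.strip():
                    attrs[key.strip()] = value.strip()
        if name and attrs:
            # Merge repeated mentions of the same entity, case-insensitively.
            entities.setdefault(name.lower(), {}).update(attrs)
    return entities


raw = "Sarah|employer:Google|role:software engineer\n- sarah|tenure:3 years\nnot an entity line"
print(parse_entity_lines(raw))
# {'sarah': {'employer': 'Google', 'role': 'software engineer', 'tenure': '3 years'}}
```

Keeping the parser a pure function makes it straightforward to unit-test against messy sample outputs without making any API calls.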

```python
from collections import defaultdict


class EntityMemory:
    """Track and update information about entities (people, places, things)."""

    def __init__(self):
        self.entities: dict[str, dict] = defaultdict(dict)

    def update_entity(self, name: str, attributes: dict):
        """Merge new attributes into an entity (names are case-insensitive)."""
        self.entities[name.lower()].update(attributes)

    def get_entity(self, name: str) -> Optional[dict]:
        """Get entity information, or None if the entity is unknown."""
        return self.entities.get(name.lower())

    def get_context_string(self) -> str:
        """Get all entities as a context string."""
        if not self.entities:
            return ""
        lines = ["Known entities:"]
        for name, attrs in self.entities.items():
            attr_str = ", ".join(f"{k}: {v}" for k, v in attrs.items())
            lines.append(f"- {name}: {attr_str}")
        return "\n".join(lines)


class EntityAwareChatbot:
    """Chatbot that tracks entities mentioned in conversation."""

    def __init__(self, system_prompt: str):
        self.system_prompt = system_prompt
        self.conversation = ConversationMemory()
        self.entities = EntityMemory()

    def chat(self, user_message: str) -> str:
        self.conversation.add("user", user_message)

        # Extract entities from the user message
        self._extract_entities(user_message)

        # Build context with entity information
        entity_context = self.entities.get_context_string()
        messages = [
            {"role": "system", "content": f"{self.system_prompt}\n\n{entity_context}"}
        ]
        messages.extend(self.conversation.get_messages())

        response = client.chat.completions.create(
            model="gpt-4o",
            messages=messages
        )
        assistant_message = response.choices[0].message.content

        self.conversation.add("assistant", assistant_message)
        return assistant_message

    def _extract_entities(self, text: str):
        """Extract entities from text using an LLM."""
        # Pass known entities so pronouns ("She's been there...") map to a name
        known = self.entities.get_context_string() or "Known entities: none"
        response = client.chat.completions.create(
            model="gpt-4o-mini",
            messages=[{
                "role": "user",
                "content": f"""Extract entities (people, places, organizations, products) and their attributes from this text.
Format: entity_name|attribute1:value1|attribute2:value2
One entity per line. Return "NONE" if no entities found.
If the text refers to one of the known entities below by a pronoun, use that entity's name.

{known}

Text: {text}"""
            }],
            max_tokens=200
        )
        result = response.choices[0].message.content
        if result.strip().upper() == "NONE":
            return

        for line in result.strip().split("\n"):
            parts = line.split("|")
            if len(parts) >= 2:
                # Strip list markers the model may add, such as "- "
                name = parts[0].strip().lstrip("-* ")
                attrs = {}
                for part in parts[1:]:
                    if ":" in part:
                        key, value = part.split(":", 1)
                        attrs[key.strip()] = value.strip()
                if name and attrs:
                    self.entities.update_entity(name, attrs)


# Usage
bot = EntityAwareChatbot("You are a helpful assistant that remembers details about people and things.")
bot.chat("My friend Sarah works at Google as a software engineer.")
bot.chat("She's been there for 3 years and loves it.")
print(bot.chat("What do you know about Sarah?"))  # Should recall all details
```

Production Memory System
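`ProductionChatbot._save_state` writes `state.json` in place, so a crash mid-write can leave a truncated file that fails to load in the next session. A common remedy is to write to a temporary file in the same directory and swap it in with `os.replace`, which Python documents as atomic on POSIX systems when both paths are on the same filesystem. The sketch below uses hypothetical `save_json_atomic` and `load_json` helpers. Its `default=str` is a blunt way to serialize values such as `datetime`; that is an assumption of this sketch, not something the article's code does.

```python
import json
import os
import tempfile
from pathlib import Path


def save_json_atomic(path: Path, data: dict) -> None:
    """Write JSON so readers never see a half-written file."""
    path.parent.mkdir(parents=True, exist_ok=True)
    # Write to a temp file in the same directory, then swap it into place.
    fd, tmp_name = tempfile.mkstemp(dir=path.parent, suffix=".tmp")
    try:
        with os.fdopen(fd, "w", encoding="utf-8") as f:
            json.dump(data, f, default=str)  # default=str stringifies datetimes etc.
        os.replace(tmp_name, path)
    except BaseException:
        # Clean up the temp file if anything failed before the swap.
        if os.path.exists(tmp_name):
            os.unlink(tmp_name)
        raise


def load_json(path: Path, default: dict) -> dict:
    """Load JSON state, falling back to `default` if the file is missing or corrupt."""
    try:
        return json.loads(path.read_text(encoding="utf-8"))
    except (FileNotFoundError, json.JSONDecodeError):
        return default


# Usage
state_path = Path(tempfile.gettempdir()) / "chat_memory_demo" / "state.json"
save_json_atomic(state_path, {"summary": "Planning a trip to Japan in March"})
print(load_json(state_path, default={"summary": ""}))
```

Falling back to a default on a corrupt file trades silent data loss for availability. Logging the failure before returning the default is a reasonable addition in a real service.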

```python
import json
from collections import defaultdict
from datetime import datetime
from pathlib import Path


class ProductionChatbot:
    """Production-ready chatbot with persistent hybrid memory."""

    def __init__(
        self,
        user_id: str,
        system_prompt: str,
        storage_path: Path = Path("./chat_memory")
    ):
        self.user_id = user_id
        self.system_prompt = system_prompt
        self.storage_path = storage_path / user_id
        self.storage_path.mkdir(parents=True, exist_ok=True)

        # Initialize memory components
        self.short_term = SummaryMemory(recent_messages=10)
        self.long_term = VectorMemory()
        self.entities = EntityMemory()

        # Load persisted state
        self._load_state()

    def _load_state(self):
        """Load persisted memory state."""
        state_file = self.storage_path / "state.json"
        if not state_file.exists():
            return
        data = json.loads(state_file.read_text())
        self.short_term.summary = data.get("summary", "")
        # Restore recent messages so a new session continues the conversation
        self.short_term.messages = [
            Message(role=m["role"], content=m["content"])
            for m in data.get("messages", [])
        ]
        # Restore long-term memories; timestamps are stored as ISO strings
        self.long_term.memories = [
            {**m, "timestamp": datetime.fromisoformat(m["timestamp"])}
            for m in data.get("memories", [])
        ]
        self.entities.entities = defaultdict(dict, data.get("entities", {}))

    def _save_state(self):
        """Persist summary, recent messages, long-term memories, and entities."""
        state_file = self.storage_path / "state.json"
        data = {
            "summary": self.short_term.summary,
            "messages": [
                {"role": m.role, "content": m.content}
                for m in self.short_term.messages
            ],
            # Embeddings are plain lists of floats, so they serialize directly;
            # with many memories this file grows large, so it is written compactly
            "memories": [
                {**m, "timestamp": m["timestamp"].isoformat()}
                for m in self.long_term.memories
            ],
            "entities": dict(self.entities.entities)
        }
        state_file.write_text(json.dumps(data))

    def chat(self, user_message: str) -> str:
        """Process a chat message."""
        # Add to short-term memory
        self.short_term.add("user", user_message)

        # Retrieve relevant long-term memories
        relevant = self.long_term.retrieve(user_message, top_k=3)

        # Build comprehensive context
        context_parts = [self.system_prompt]
        if self.entities.entities:
            context_parts.append(self.entities.get_context_string())
        if relevant:
            context_parts.append("Relevant past information:")
            for mem in relevant:
                context_parts.append(f"- {mem['content']}")

        messages = [{"role": "system", "content": "\n\n".join(context_parts)}]
        messages.extend(self.short_term.get_context())

        # Get response
        response = client.chat.completions.create(
            model="gpt-4o",
            messages=messages
        )
        assistant_message = response.choices[0].message.content

        # Update memories
        self.short_term.add("assistant", assistant_message)
        self._process_for_memory(user_message, assistant_message)

        # Persist state
        self._save_state()
        return assistant_message

    def _process_for_memory(self, user_msg: str, assistant_msg: str):
        """Extract and store information from the exchange."""
        # Combined extraction prompt; JSON mode constrains the reply to a JSON
        # object (it can still be cut off if it hits max_tokens)
        response = client.chat.completions.create(
            model="gpt-4o-mini",
            response_format={"type": "json_object"},
            messages=[{
                "role": "user",
                "content": f"""Analyze this conversation exchange and extract:
1. FACTS: Important facts to remember (preferences, personal info, decisions)
2. ENTITIES: People, places, or things mentioned with their attributes

Format your response as JSON:
{{"facts": ["fact1", "fact2"], "entities": {{"name": {{"attr": "value"}}}}}}

User: {user_msg}
Assistant: {assistant_msg}"""
            }],
            max_tokens=300
        )
        try:
            data = json.loads(response.choices[0].message.content)
        except json.JSONDecodeError:
            return  # Extraction failed, skip

        # Store facts
        for fact in data.get("facts", []):
            if isinstance(fact, str) and fact.strip():
                self.long_term.store(fact, {"user_id": self.user_id})

        # Update entities, ignoring malformed attribute values
        for name, attrs in data.get("entities", {}).items():
            if isinstance(attrs, dict):
                self.entities.update_entity(name, attrs)


# Usage
bot = ProductionChatbot(
    user_id="user_123",
    system_prompt="You are a helpful personal assistant that remembers everything about the user."
)

# Conversation persists across sessions
print(bot.chat("Hi! I'm planning a trip to Japan in March."))
print(bot.chat("I prefer budget accommodations and love trying local food."))

# Later session: memories are reloaded from disk
bot2 = ProductionChatbot(user_id="user_123", system_prompt="...")
print(bot2.chat("What travel plans do I have?"))  # Remembers Japan trip
```

References

- LangChain Memory: https://python.langchain.com/docs/modules/memory/

- Mem0: https://github.com/mem0ai/mem0

- Zep: https://www.getzep.com/

- MemGPT: https://memgpt.ai/

Conclusion

Memory turns chatbots from stateless responders into assistants that build a relationship with the user over time. Start with a simple conversation buffer for short interactions. Add summary memory when conversations approach the model's context limit; it preserves important information while staying within the token budget. Implement vector-based long-term memory to recall relevant past interactions. Track entities to keep a consistent picture of the people, places, and things mentioned across sessions. Persist memory state so users can return days later and pick up where they left off. The best chatbots feel like they know you: they remember your preferences, reference past conversations appropriately, and build on previous interactions. Getting there takes deliberate memory architecture, but the payoff is a much better user experience.

Discover more from C4: Container, Code, Cloud & Context
